A Rickety Stairway to SQL Server Data Mining, Algorithm 3: Decision Trees

by Steve Bolton

This series of self-tutorials is an elementary-level introduction to SQL Server Data Mining (SSDM), not the product of someone who’s done postgraduate work in statistics. Instead of being the last word on the subject, it is a little more like a child learning to speak its first words; yet I suppose it does have some worth, since there are so few other words being spoken about one of SQL Server’s most under-utilized features. Perhaps all this series proves is that a would-be DBA with a childlike understanding of statistics can still get substantial benefit from it. Like every kid who is now able to take his first steps up a stairway, we would like to reach the clouds like Jack did on his beanstalk – but to get to the next level we’ll have to climb Decision Trees, which has branches of an entirely different kind.

The third of Microsoft’s nine SSDM algorithms isn’t difficult to understand, since it builds on the two simpler data mining methods covered in my last posts, A Rickety Stairway to SQL Server Data Mining, Algorithm 1: Not-So-Naïve Bayes and A Rickety Stairway to SQL Server Data Mining, Algorithm 2: Linear Regression. As discussed in more depth in my initial post, A Rickety Stairway to SQL Server Data Mining, Part 0.0: An Introduction to an Introduction, with statistical analysis of this kind data must be differentiated by its meaning to a much greater extent than in the relational database world. In SSDM this is determined by its Content type, which can be one of several values: Key, Key Sequence, Key Time, Cyclical, Ordered, Discrete, Discretized, Continuous and Table. For an in-depth discussion of this issue, see A Rickety Stairway to SQL Server Data Mining, Part 0.1: Data In, Data Out, which also sheds some light on how to interpret the results SSDM returns. Decision Trees is limited to using to the Key type, which is equivalent to a primary key, which each mining structure must have; the nested Table type, which I will defer discussion for several weeks due to my misadventures with it in A Rickety Stairway to SQL Server Data Mining, Part 0.2: How to Dig Out of a Data Mining Cave-In; and the Cyclical and Ordered types, which are allowed but treated as Discrete, as is the case with most SSDM algorithms. The really important Content distinction with most of these algorithms is between columns marked with the Distinct type and those that are Continuous. The former indicates that the data represents a state or object, such as a GUID, database file, or class label, while the other signifies a numeric range of some kind. Rank and order are implied with the second but not with the first. Getting these distinctions right is imperative with data mining, because the algorithms are logically limited in the Content types they can accept and the means available to process them; these strictures are baked right into the mathematics that make the algorithms possible, rather than being some artificial limitation particular to Microsoft’s code. One of the major limitations of Naïve Bayes is that it can only handle Discrete data. To deal with data that is naturally Continuous, the data must be converted to the Discretized type by dividing it into buckets. The drawback is that numeric data loses its rank and order, so that a bucket of values from 1 to 50 is treated on the same par as a bucket of values from 1,000 to 5,000. Linear Regression has the opposite problem: all of the attributes fed into and returned from it must be labeled as Continuous, which means that data that pertains to a state with no rank or order cannot be treated as Discrete.

I suspect this is why the trials in the last two posts did not turn up some of the relationships that would be expected with the IO data we’ll be working with in this series. In post 0.2 I explained the methodology of the trials and provided the schema I’ll follow, which uses data taken from about three days of polling dm_exec_query_stats, dm_io_pending_io_requests, dm_io_virtual_file_stats, dm_os_performance_counters, dm_os_wait_stats and sp_spaceused every minute, in the hopes of intentionally causing IO pressure in order to study the topic further. As discussed in the last two posts, I cut back (for the moment) to three denormalized views in which I left joined dm_io_pending_io_requests, dm_io_virtual_file_stats, dm_os_performance_counters and a parent RecordTable (which tracked time) to sp_spaceused, dm_os_wait_stats and dm_exec_query_stats respectively, which provide 142,080, 7,684,160 and 3,887,072 cases (i.e. row counts) respectively for our trials. Many of the relationships one would expect between the performance measures these system views returned emerged instantly, which confirmed that both algorithms were doing their job. A few other intriguing links were evident as well, as we shall discuss in due time. Even the absence of links dovetailed nicely with the limitations of both algorithms though. Before the trials, I expected a measure called MinuteGap from the parent RecordTable to evince strong links with some other measure of system performance, but there were no such links with Naïve Bayes – perhaps because it is a Continuous column that had to be discretized. On the other hand, the moderate relationships Naïve Bayes showed between other attributes and QueryPlanHashID, QueryTextID, PlanHandleID, QueryHashID and QueryStatsID were virtually non-existent with Linear Regression, quite possibly because they are all naturally Discrete measures which had to be treated as Continuous. In this week’s trial, both sets of relationships coexisted serendipitously, in all likelihood because of one of the distinct advantages of Decision Trees: it is the first of the Microsoft’s nine algorithms we will survey that can accept both Content types.

Refining the Results (i.e., How to See the Forest for the Decision Trees)

With Decision Trees, we are able to get the best of both worlds, thanks to the hard work of professionals in fields like mathematics, machine learning and psychology who continually refined Conceptual Learning Systems (CLS) a statistical approximation of human learning published by psychologists Earl B. Hunt, Janet Marin and Philip J. Stone in 1966.[i] In the late ‘70s this research was adapted into an algorithm called ID3 by John Ross Quinlan, the most prolific writer on the topic.[ii] In an academic research paper released in 1993 he further refined it into a more flexible version called C4.5.[iii] Many of Quinlan’s revisions introduced new means for taking certain types of data into account, such as missing values or Continuous predictable outputs. Another group of researchers from Stanford University published an important paper in 1984 on Classification and Regression Trees (CART), which the Microsoft algorithm makes use of for Discrete and Continuous attributes respectively.[iv] My data mining wishlist for the next version of SQL Server includes more in-depth explanations in the Books Online (BOL) technical references for each algorithm, because it’s sometimes difficult to tell which of the myriad variations of each Microsoft is using under the hood. For example, the documentation for Decision Trees includes the term “industry standard,” but it isn’t always easy to discern which of those standards is being used in SSDM’s internal calculations, especially if you’re a newbie like me. Perhaps some of it is proprietary and cannot be published, but the rest can probably be documented better. The Microsoft version must be applying CART, since the documentation refers to classification and regression trees; it is also likely applying some logic from C4.5, which has the advantage of being able to build trees that can have more than two branches at each juncture.[v] I am not sure, however, if Microsoft is using a welter of other refinements like the CHAID (which uses a chi-squared test), Gini indexes and the like that determine how the Decision Trees are created and discarded. I was able to glean from the documentation and from watching the models grow during processing that it is not applying newer bottom-up methods of generating trees.

Just as the basics of Linear Regression can be grasped by visualizing a scatter plot, so too can Decision Trees be thought of as a fairly simple statistical means of producing a flow chart. At each juncture, a probability is calculated to determine which path is most likely. Any implementation of the algorithm is shaped by three factors: the criteria used to decide which attributes will create splits in the tree, when to stop creating new branches and how to prune useless branches out. The goal is akin to Occam’s Razor, i.e. to squeeze the most information out of the smallest possible tree.[vi] That helps us avoid the twin plagues of overfitting, performance degradation and pointless information glut, which we have already seen repeatedly in this series. Like many implementations, SSDM recursively splits branches from the top down until it determines that no more information can be gained, in a process commonly referred to as Top Down Induction of Decision Trees (TDIDT) in the literature. Each predictable attribute starts with its own separate tree with a single top (All) node (there is no separate marginal stats node as you would see with Naïve Bayes). Depending on the Content types of the inputs and the predictable output they’re being compared against, SSDM picks attributes that it determines will have the highest information gain, then recursively splits the tree until no more branches can be generated. During this process, nodes are generated in the tree to represent both splits and leaf nodes, each of which has its own accompanying set of statistics. The methods by which SSDM calculates information gain may vary, but thankfully we’ve already covered them all in the last couple posts. If both the input and output are Discrete, then one of the three Bayesian methods used for feature selection is applied; if the input is Continuous and the output is Discrete, then former is discretized (the main reason to use the Discretized type with Decision Trees would be to guide the outcome in this particular situation, using the DiscretizationMethod and DiscretizationBucketCount properties as BOL says, but this will also discretize it for the continuous calculations); but if both the outputs and inputs are Continuous, then Linear Regression is applied. Keep in mind, however, that unlike with Linear Regression, a split may be created in the regression line instead of a single regression equation, depending on how it is scored. This basically generates regressions that form angles in a scatter plot instead of just a simple line. So at any given split, SSDM will either generate a classification tree based on Bayesian logic or a regression tree based on the kind of scatter plot logic discussed in the last post. This allows us to use both Discrete and Continuous inputs and outputs in the same model.

This in turn leads to mining results which are easy to understand; despite all the technical mumbo-jumbo above, it’s just a fancy way of calculating decisions that are very simple to diagram. For example, you come to a fork in the road. The probabilities say that there is a 75 percent chance your destination is to the left and 25 percent that it will be on the right; so in most cases, the simplest solution would be to go left. Judging from the sheer number of illustrations in the literature one of the most common uses seems to be medical diagnosis. For example, your funny bone hurts. There is a 75 percent chance you banged your elbow, a 24 percent chance you have tendonitis, a 1 percent chance that you’re having a thrombosis and zero chance that you have Ebola virus. Each outcome would represent a separate branch, each with its own stats. The algorithm is also useful when attributes are fewer in number and when processing speed is necessary, or when an understanding of the inner workings of the algorithms would help. I also wonder if they would be useful on hierarchies and other structures that naturally follow tree patterns, although I haven’t done any experimentation or read anything that would suggest that. On the other hand, the algorithm is prone to overfitting, especially when “equivalent descriptions” are generated for the same state or object, thereby causing useless redundancy. It is also unsuited to problems which cannot be easily described with simply AND/OR branches, such as XOR ideas. Attributes with many distinct values – such as a primary key or timestamp – may be disproportionately favored by certain methods of scoring information gain.[vii]

We don’t have to cover much new ground to explain how Microsoft’s implementation scores information gain. It essentially uses the same four methods of feature selection explained in the last couple of posts, particularly post 0.2 When a regression tree is created because the attributes are Continuous, the Interestingness Score is used, just as it is with the Linear Regression algorithm. When a classification tree is created because we’re dealing with Discrete data under one of the combinations of inputs and outputs described above, then SSDM applies one of the three Bayesian methods used for feature selection in many of the other algorithms. One of these is Shannon’s Entropy, a common method of mathematically discerning the information content of a set of symbols. The calculations involved are complicated (Microsoft provides the particular formula SSDM uses at the Books Online page on Feature Selection) but it is built on some concepts that aren’t difficult for amateurs like myself to follow. For example, it is a well-known principle of cryptography that symbols tend to occur in certain places and frequencies, such as the incidence with which words begin with the letter S or sentences start with the word The in English. Instead of applying these patterns to code-breaking, techniques like Shannon’s Entropy are used to tease out information content that already exists within data.[viii] Another key principle underlying it is so simple that even a caveman can understand it: you can’t get something for nothing. This ex nihilo limitation is akin to the Second Law of Thermodynamics, except that in this case the logical restriction is that you can’t generate information content out of thin air. Data mining is often treated as if it were magic, but it cannot add information that is not already present; for example, you’re not going to be able to reliably deduce the high temperatures for the last 30 days if your meteorological measurements are limited to wind speed.[ix] As discussed in previous posts, the other two methods are Bayesian with K2-Prior and Bayesian Dirichlet (BDE) Prior, each of which depends on methods of informing the algorithm of prior probability distributions that were published in a 1995 research paper by Heckerman, et al.[x]

As I’ve noted in past posts, it’s sometimes difficult with other algorithms to determine how much feature selection is going on under the hood with other SSDM algorithms, or which of the three Bayesian methods is being used when the columns are Discrete. I was able to verify that some of my attributes, such as WaitTypeID, were being eliminated in Linear Regression due to low Interestingness Scores, regardless of how I set the two parameters that are supposed to control feature selection, MAXIMUM_OUTPUT_ATTRIBUTES and MAXIMUM_INPUT_ATTRIBUTES. In this week’s trials I will leave them both set at their default values, as well as the mining model flags MODEL_EXISTENCE_ONLY, NOT NULL (which is unnecessary because our data shouldn’t have nulls) and REGRESSOR and the FORCE_REGRESSOR parameter.[xi] I touched on the last two briefly in my post on Linear Regression, where applying the latter to the WaitTypeID column resulted in a big increase in processing time without returning any useful information, i.e. the classic signs of overfitting. Thankfully, in Decision Trees we can control feature selection directly through the SCORE_METHOD parameter. The Interestingness Score is always used whenever a Continuous column is involved, but if we’re dealing with Discrete data we can set this to 1 for Shannon’s Entropy, 3 for Bayesian with K2 Prior and 4 for BDE Prior.

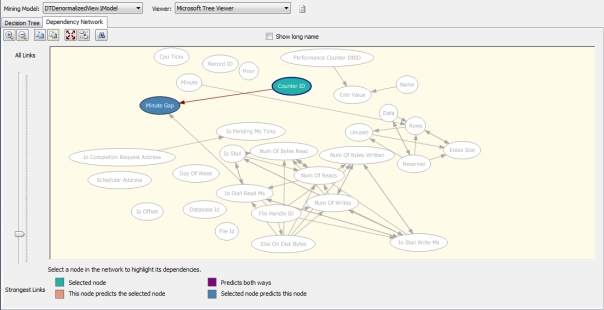

I experimented with this parameter on the first of my three views, which returned 142,080 rows when RecordTable, dm_io_pending_io_requests, dm_io_virtual_file_stats and dm_os_performance_counters were joined to the sp_spaceused table. This was a pittance compared to the 7,684,160 and 3,887,072 rows returned when they were joined to dm_os_wait_stats and dm_exec_query_stats respectively, which required some tweaking of other parameters in order to complete this tutorial before the crack of doom. Thirty percent of the data of each view was held out for training as usual. The first trial on my creaky old development machine took 2 minutes and 34 seconds when all of the other flags and parameters were left at their normal settings and SCORE_METHOD was set at its default of 4. About 100 megabytes was added to the SQL Server Analysis Services (SSAS) database size, which was fairly little compared to the other two structures. When set to 3 for Bayesian with K2 Prior, processing time was reduced to 2:24. Setting it to 1 for Shannon’s Entropy required just two seconds more. At first glance, I wasn’t able to detect any difference in the results returned by this structure’s three mining models. As usual, some of the expected relationships within the columns of a particular constituent table and between related measures were evident; for example, the columns Unused, Reserved, Data and IndexSize from sp_spaceused were closely interrelated, as we several measures of IO throughput. At long last, however, some expected relationships that were absent with Naïve Bayes and Linear Regression were evident with Decision Trees, such as CounterID from dm_os_performance_counters. As I wrote in past posts, the latter measure was added to the RecordTable to deal with a mystery that emerged during the data collection phase: the SQL Server Integration Services (SSIS) job was supposed to run every minute, but in practice the interval eventually changed to two minutes, then three, then four by the end of the trial. I was expecting MinuteGap to show some sort of relationship with other measures at some point, but did not expect the top link in all three of the models for the first mining structure to be between MinuteGap and FileHandleID. As shown in the Dependency Network in Figure 1, it was also strongly linked to CounterID. This suggests that if we dig deeper into the results using the Microsoft Tree Viewer, the Generic Content Tree Viewer and DMX queries, we might be able to relate the gap in the SSIS job to a particular type of performance bottleneck on a specific file. This is the kind of solid benefit a DBA can get from data mining with a minimal investment of time, energy, processing resources and know-how. In fact, the data mining process can be automated to uncover such useful relationships routinely, which might even aid the smartest performance gurus among our SQL Server MVPs, by at least taking some of the grunt work out of identifying bottlenecks and their causes.

Figure 1: Dependency Network for the First Mining Structure with SCORE_METHOD = 4

The mining structure based on sp_spaceused also turned up some unexpectedly strong relationships between IOCompletionRequestAddress with the IOPendingMSTicks, SizeOnDiskBytes and Unused columns, while the last of these was also closely related to SchedulerAddress. By looking up the specific addresses we could probably pinpoint other memory and thread bottlenecks that otherwise might not come to light. Some of the other unusual links included those between Minute and Rows from sp_spaceused, which might point to a change in the number of rows being generated in a database at a particular time of the hour, as well as between Name and CntrValue, which indicates that a particular database was strongly correlated to changes in performance. FileHandleID also had some of the other leading links, such as with measures of IO like NumOfWrites, SizeOnDiskBytes, NumOfBytesWritten and IOStallWriteMs – which curiously did not have strong links with similar measures like DatabaseID and FileID.

Strangely, the strongest links for the second mining structure (based on dm_os_wait_stats) were almost a mirror image, with DatabaseID being tightly linked to the same columns FileHandleID was in the first structure. I cannot speculate as to why DatabaseID from was weakly linked in the first but not the second, since it corresponds closely to FileHandleID from the same table, dm_io_virtual_file_stats, but this at least confirms that specific database files were closely correlated to certain measures of IO. This time, SchedulerAddress was correlated with IOPendingMSTicks, which in turn was strongly linked to CPUTicks, Minute and Hour; my first guess would be that some particular block of memory was causing IO stress at particular times, perhaps when the SSIS job was running. This would dovetail nicely with the high RAM consumption I witnessed during the data collection process, which led to spikes in IO rather than constant IO stress I expected, due to repetitive hits on the page files. IOCompletionRequestAddress was also significantly correlated with MaxWaitTimeMS.

All of this was gleaned from the Dependency Network, which provides no statistics. It has the advantage of being easy on the eyes, which makes it the ideal visualization tool to begin with when interpreting the results of many SSDM algorithms. If we’re using Decision Trees, we can dig down to greater levels of detail with the Microsoft Tree Viewer and the Generic Content Tree Viewer, both of which I’ve touched on in the last two posts. Linear Regression also uses the Tree Viewer but several of the controls within it are of no use, since that algorithm produces trees of just one node. When we’re dealing with Continuous data, the Mining Legend will appear like the example in Figure 2 to supply us with the regression equation, but that’s a topic we already discussed in the last tutorial. If we’re working with Continuous data in Decision Trees, we may see a node structure like the one below.

Figure 2: Some Microsoft Tree Viewer Output for the Second Mining Structure

The statistical importance of the attributes drops as you move from left to right in the tree structure, with the (All) node being most significant. The nodes can be expanded and collapsed with mouse clicks, while the Show Level and Default Expansion controls determine how many levels are displayed in each tree, which can be quite complicated in other projects. In this example, we’re checking out the attributes and values most likely to determine the value of SignalWaitTimeMs from the mining structure built on the dm_os_wait_stats view. You can also select the Background dropdown to highlight nodes in order of the coefficients of the input column you’ve selected, with the highest values shaded in blue and the lowest ranging from grey to white. The diamonds depict the node’s statistical variance, so that as BOL puts it, “A thinner diamond indicates that the node can create a more accurate prediction.” When you’re working with Discrete data, the nodes will be accompanied by histograms rather than diamond charts.

Figure 3: Microsoft Tree Viewer for the Third Mining Structure

Personally, I find the histograms for these Discrete attributes harder to read, since the colors contrast with each other and the Mining Legend cannot be ordered by the highest or lowest probabilities. For example, the highest value for LastExecutionTime is in yellow, but it corresponds to the fourth row in the Mining Legend despite having the highest probability. Figure 3 was typical with the results I received from Decision Trees when working with Discrete attributes. As you can see, the relationships SSDM decides to focus on are not always useful; like the ones for SchedulerAddress above, many of them are based on NOT values which only tell us that a particular column has a high probability of being any one of a wide range of other values. Many of the other trees returned for this mining structure and others I’ve practiced on in the past are also prone to cluttering with comparisons against Missing values, which in many circumstances may be a bogus comparison against attributes or values artificially eliminated through feature selection.

Chopping Down Decision Trees: Some Limitations of the Algorithm

Crafting mining structures to extract the most useful data out of Decision Trees may take a bit of practice for these reasons. Moreover, Decision Trees seems to be much more processor-intensive than the other algorithms we’ve touched on so far, in which memory and disk bottlenecks were more of a problem. For the first time in our trials, all six cores on my beat-up old development machine were maxed out for much of the processing phase. On the other hand, the results require a lot more disk storage space. In order to complete processing on the two larger mining structures I was forced to adjust two other parameters specific to Decision Trees, COMPLEXITY_PENALTY and MINIMUM_SUPPORT. Higher values for the former will limit the number of splits generated, thereby reducing resource consumption and preventing clutter with useful results, i.e. the classic signs of overfitting. When the number of attributes is between 1 and 9 the default is 0.5; between 10 and 99 attributes it is 0.9 and for more than 100 attributes, it is 0.99. For both of the two larger mining structures I had to set it at 0.999. When MINIMUM_SUPPORT is set to 1 or more the threshold of cases needed to create a split is set to an absolute number, but when it is less than 1, it represents a percentage of the total cases. I set it to 0.05 for the second and third mining structures. Decision Trees also has a parameter called SPLIT_METHOD which allows you to limit the way a tree branches out by allowing only binary splits that produce two nodes or complete splits where the branches generated can be equal to the number of attribute values; I left it at the default value to allow both for each mining model, since the issues I faced could not be solved by limiting it to binary splits. The SCORE_METHOD was left at 4 for both. It took 46 minutes using these parameter values to process the 7.6 million rows in the mining structure built on the dm_os_wait_stats view. I couldn’t finish processing the 3.9 million rows in the mining structure built on dm_exec_query_stats at all, thanks to the following error: “A string store or binary store with a compatibility level of 1050 is at the maximum file size of 4 gigabytes. To store additional strings, you can change the StringStoresCompatibilityLevel property of the associated dimension or distinct count measure to ‘1100’ and reprocess. This option is only available on databases with a compatibility level of ‘1100’ or higher. Physical file:. Logical file:.” SSAS 2012 has a new property, StringStoreCompatibility, which can prevent this file size limit from throwing a monkey wrench in cube processing, but Microsoft apparently forgot to implement it for mining structures. A user named furmangg opened a request for this feature last January at Microsoft Connect, but the moderator replied that it would only receive attention if a sufficient number of users requested it, but don’t hold your breath: only two other users have reported a need for it. It was almost as if Microsoft completely forgot about SSDM when implementing this simple but necessary upgrade for cubes.[xii] I tried a couple of workarounds for the problem that were used for cubes prior to 2012, such as by manually changing the forbidden SSAS parameter PropertyBufferRecordLimitEnabled in msmdsrv.ini to 1, which according to MSDN forum member Jeffrey Wang, “can reduce the maximum number of records inside a temporary processing buffer, hence indirectly limiting the number of strings added to the temporary string store associated with the temporary buffer. That may help when an attribute has a lot of directly related attributes which have string keys.”[xiii] This shortened the interval between the events Training Trees and Reading Cases in a Profiler trace until they were down to a ratio of about 3 or 4 to 1, so it definitely had an effect on processing, but it wasn’t sufficient to fix the problem. Strangely, Profiler revealed that processing consistently failed after the Training Model phase with a message that always included the wrong DatabaseName; I tried detaching all of the other databases from the server one by one, the DatabaseName was finally corrected but the same processing error occurred. Curiously, the Physical File and Logical File were left blank in the error messages and the size of the entire database folder did not approach four gigabytes during any point in processing, so I wonder if a metadata error of some kind was actually responsible for the error.

Rather than postponing this self-tutorial series yet again in order to invent a fix that doesn’t yet exist anywhere on the planet, I decided to use it to my advantage by changing my schema for the third mining structure. In the last three posts I’ve used the pretty much the same lineup of input and predictable columns that I set down in post 0.2, which meant that some reverse relationships between the columns that were always set as inputs rather than predictables might have been obscured. For example, some important Discrete attributes like PlanHandleID, QueryHashID, QueryTextID and SQLHandleID were consistently used to predict other attributes, particularly Continuous measures, so this time I swapped many of these columns so that the predictables were now inputs and vice versa. I also eliminated the total_clr_time, last_clr_time, min_clr_time and max_clr_time columns since I wasn’t using any .Net Common Language Runtime (CLR) functionality in my queries; I had left it in because I wasn’t sure if some other activity on the server was using it, but there were only two uses of it in all the millions of rows of exec data I collected. BOL suggests that performance can be improved if you “Restrict the number of discrete values for any attribute to 10 or less. You might try grouping values in different ways in different models.” I haven’t messed around with any brute force bucketing of Discrete values like this simply because I didn’t want to partition my data in ways that would make it less comparable over this series, plus I didn’t want to take the risk of obscuring links I might not otherwise know about. Because I overturned the inputs and predictables, the 18 minutes and 28 seconds it took to process this reversed version of the dm_exec_query_stats structure can’t really be compared against the times for the same structure when we run other algorithms. Nevertheless, it not only completed without the nasty string store error but provided some useful insight into the data. Among these was a strong relationship in the Dependency Network between the PerformanceCounterDBID and three other measures, CntrValue, SQLHandleID and MinuteGap. This might suggest that a change in a particular performance counter was associated with a specific query and might even be related to the mysterious MinuteGap problem.

To retrieve more specific information about relationships like this we can of course make use of direct DMX queries, but as usual, we will postpone that advanced topic until the discussion of the nine algorithms is complete. The next best thing is to access the Generic Content Tree Viewer, which displays the data used to build the fancy visualizations in Microsoft Tree Viewer. As discussed in past posts, the Data Mining team at Microsoft deserves a lot of credit for developing a common metadata format that hold the disparate output of all nine algorithms, which is a bit like making a bucket that can hold both apples and oranges. One of the prices to be paid for that, however, is that the meaning of the columns in that format may change in subtle ways from one algorithm to the next. In fact, the format used by Decision Trees differs within itself depending on whether a particular node refers to a classification or regression tree, which is exactly like comparing apples and oranges. I developed the handy chart below or interpreting the results returned by Decision Trees, based on the information supplied in BOL;

Figure 4 Metadata for Decision Trees (adapted from Books Online)

Another prices to be paid for the common data format is that we must deal with two levels of denormalized nested tables, which can cause confusion for those coming from a relational background. The output of each column in Decision Trees varies by the type of node, which can be one of five different values. The NODE_DISTRIBUTION column is also actually a nested table, whose columns different in meaning depending on their VALUE_TYPE. As usual, ATTRIBUTE_NAME is the identifier of the predictable column and SUPPORT is the count of cases for that node, while PROBABILITY is calculated as described in Figure 4. Like ATTRIBUTE_VALUE and VARIANCE, the latter is completely dependent on the VALUE_TYPE. If the attribute described in a particular row is Continuous, the same VALUE_TYPES and corresponding interpretations of the other columns will follow the rules mentioned in A Rickety Stairway to SQL Server Data Mining, Algorithm 2: Linear Regression. This is to be expected, since Decision Trees is essentially applying the Linear Regression mining method in these cases. When the VALUE_TYPE is 4 or 5 for Discrete or Discretized columns respectively, the algorithm is creating classification trees instead of calculating regressions. In these instances each ATTRIBUTE_NAME and ATTRIBUTE_VALUE pair represents a unique combination of the predictable attribute and one of its distinct values, together with a PROBABILITY value, which should sum to 1 for all of the rows depicted. VARIANCE will always be nil with Discrete columns. All of this adds a little complexity, so that you have to mine the mining results themselves, so to speak. Yet there are some advantages to visualization though when using the Generic Content Viewer with Decision Trees, since it allows you to do something the Microsoft Tree Viewer does not: see all of the separate tree nodes for each predictable attribute in one view. Thanks to the intrinsic problem of returning results that aren’t cluttered with Missing and NOT values, which seems to be the real Achilles Heel of Decision Trees, I wasn’t able to dig out much extra value from the Generic Content Tree Viewer. It merely displays the same information – or lack thereof – we see in the Microsoft Tree Viewer, rather than displaying extra trees that we wouldn’t otherwise get to see. The combination of Discrete and Continuous attributes in a single algorithm illuminated some relationships we might not have able to see otherwise, such as those for MinuteGap, IOPendingMSTicks and SQLHandleID. In our next step up the rickety stairway, we will try to shine light on our data from a different direction by using a distantly related algorithm, Logistic Regression, which also has the capability of handling both Discrete and Continuous attribute types

[ii] The relevant papers seem to be Quinlan, John Ross, 1979, “Discovering Rules from Large Collections of Examples: A Case Study,” pp. 168-201 in Michie, D., ed., Expert Systems in the Micro-Electronic Age. Edinburgh University Press: Edinburgh, Scotland; Quinlan, John Ross, 1979, Technical Report HPP-79-14: Induction Over Large Databases. Heuristic Programming Project of Stanford University: California; Quinlan, John Ross, 1986, Induction of Decision Trees, pp. 81-106 in Machine Learning, Vol. 1, No.1

[iii] Quinlan, John Ross, 1993, C4.5: Programs for Machine Learning. Morgan Kaufmann: San Mateo, California.

[iv] Breiman, Leo; Friedman, Jerome H.; Olshen, Richard A. and Stone, Charles J., 1984, Classification and Regression Trees. Wadsworth International Group: Belmont, California. For more information on the history of the algorithm, see Berson, Alex; Smith, Stephen; Thearling, Kurt, 2000, An Overview of Data Mining Techniques. McGraw-Hill: New York. Excerpts cited at a webpage by the same name at http://www.thearling.com/text/dmtechniques/dmtechniques.htm. Frans Coenen, Franz, 2011, Data Mining: Past, Present and Future,” pp 25-29 in The Knowledge Engineering Review, Vol. 26, Special Issue 1, February 2011 (see http://journals.cambridge.org/action//displayFulltext?type=1&pdftype=1&fid=8038648&jid=KER&volumeId=26&issueId=01&aid=8038646 ); Stefanowski, Jerzy, 2010, “Discovering Decision Trees,”part of the course material for the Lecture 5 SE Master Course at the Poznań University of Technology’s Institute of Computing Science. Available at http://www.cs.put.poznan.pl/jstefanowski/sed/DM-5-newtrees.pdf

[v] p. 137, Schultz.

[vi] See Stefanowski’s paper for a good summation of this. Also refer to the Wikipedia article “Decision Tree Learning” at http://en.wikipedia.org/wiki/Decision_tree_learning

[vii] See Stefanowski again.

[viii] For a readable introduction to Shannon’s Entropy, see pp. 51-58, Ueltschi, Daniel, “Shannon’s Entropy,” cited at http://www.ueltschi.org/teaching/chapShannon.pdf

[ix] The human race has barely scratched the surface of the amazing things data mining can do, but the sky is not the limit.

[x] Heckerman, David.; Geiger, Dan and Chickering, David M., 1995, “Learning Bayesian Networks: The Combination of Knowledge and Statistical Data,” pp. 197-243 in Machine Learning No. 20. Available at http://research.microsoft.com/apps/pubs/default.aspx?id=65088Since SSDM depends a lot on the research in this paper, I read it all the way through, although I was only able to grasp parts of it.[xi] For kicks, I decided to see what would happen if we set the FORCE_REGRESSOR parameter on single Continuous input column, so that it actually performs a regression, against all Discrete outputs. SSDM apparently ignored it. If you set the flag on a Discrete column an error is returned.

[xii] It is just one more thread in a pattern of inattention by the corporate brass to SSDM, which is still by far the best data mining tool offered by any of the big software firms despite being ignored for the last four years or more. I guess we can answer the classic solipsist dilemma definitively and say that if a Decision Tree falls in a forest, and Microsoft is no longer listening, it does indeed fall.

[xiii] See the MSDN webpage “Denali SSAS StringStoreCompatibility level 1100 is not working for Denali CTP3” at

http://social.msdn.microsoft.com/Forums/nl-BE/sqlanalysisservices/thread/45467999-d7ca-4b45-930f-d03cc879d49c; the MSDN webpage “Configure String Storage for Dimensions and Partitions”

http://msdn.microsoft.com/en-us/library/gg471589.aspx; and the Microsoft Connect webpage “Allow StringStoresCompatibilityLevel to Apply to Mining Structures” by the user named Furmangg at http://connect.microsoft.com/SQLServer/feedback/details/721171/allow-stringstorescompatibilitylevel-to-apply-to-mining-structures. For the forbidden parameter, see the MSDN page “Way to Get Beyond 4GB String Store Limit?” started by Furmangg at http://social.msdn.microsoft.com/forums/en-US/sqlanalysisservices/thread/973f4d41-b9d9-4c2b-96d2-876ec1679f97/

Posted on January 16, 2013, in A Rickety Stairway to SQL Server Data Mining and tagged Algorithm, Analysis Services, Analytics, Data Mining, Decision Trees, Knowledge Discovery, SQL Server, SQL Server Data Mining, SSDM, Stevan Bolton, Steve Bolton. Bookmark the permalink. Leave a comment.

Leave a comment

Comments 0