
A Rickety Stairway to SQL Server Data Mining, Part 14.6: Custom Mining Functions

by Steve Bolton

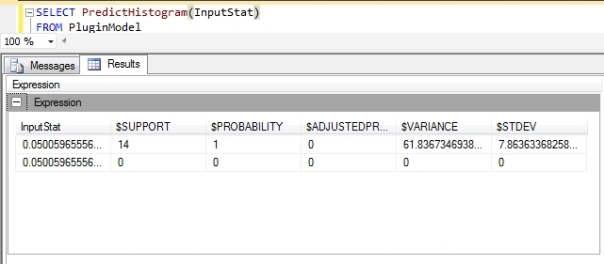

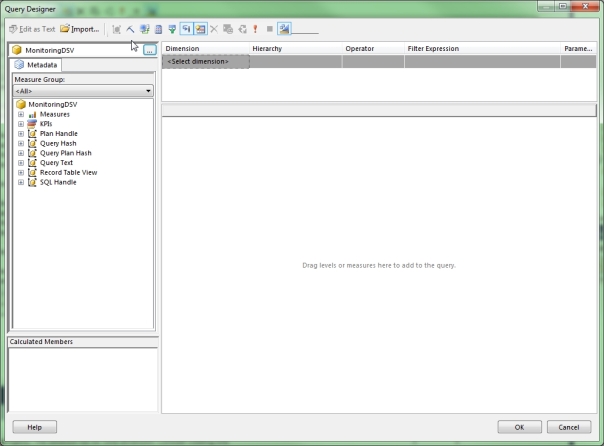

…………In the last installment of this amateur series of mistutorials on SQL Server Data Mining (SSDM), I explained how to implement the Predict method, which controls how the various out-of-the-box prediction functions included with SSDM are populated in custom algorithms. This limits users to returning the same standard columns returned by calls to prediction functions against the nine algorithms that ship with SSDM. The PredictHistogram function, for example, will return a single prediction column, plus columns labeled $Support, $Probability, $AdjustedProbability, $Variance and $StDev, just as it does with the out-of-the-box algorithms. Although we can control the output of the standard prediction functions to return any values we want, it may be more suitable in some cases to specify our own output format with more flexibility. This is where the ability to define your own custom mining functions comes in handy. Another important use case is when you need to perform custom calculations that wouldn’t normally be implemented in standard prediction functions like PredictStDev or PredictSupport. Plug-in programmers could conceivably perform any complicated calculation they like in the Predict method and return it in the results for PredictVariance, but this would be awkward and illogical if the result is not a measure of variance. Thankfully, the SSDM plug-in architecture we’ve been discussing in the past articles includes a mechanism for defining your own custom mining functions, which addresses all of these needs.

…………Aside from the calculation of cumulative statistics, developing a custom mining function is actually quite simple. The development process begins by declaring a public function in your AlgorithmBase class adorned with a MiningFunctionAttribute, which looks like the attribute declaration in my sample code: <MiningFunction(“My Custom Mining Function Description”)>. The text within the quotation marks will appear in the DESCRIPTION column of the DMSCHEMA_MINING_FUNCTIONS schema rowset, for the row corresponding to the new function. The parameter list can contain variables of your choosing, like the RecordCount integer I use in my sample code for limiting the rows returned by MyCustomMiningFunction, but special rules apply in a few cases. Parameters decorated with the MiningTableColumnRefAttribute or MiningScalarColumnRefAttribute tags must be AttributeGroup objects, such as the <MiningScalarColumnRefAttribute()> TempAttributeGroup As AttributeGroup declaration in my sample code. I believe MiningTableColumnRefAttribute tags are used for feeding nested tables to custom mining functions, but the PluginModel mining model we’ve been working with in this segment on custom mining algorithms doesn’t have one, nor do I see any benefit in adding one because of the risk of cluttering the tutorial needlessly. MiningScalarColumnRefAttribute, however, is a more common tag that allows you to pass a reference to a specific model column; in the prediction I’ll execute to test the function, I’ll pass a value of “InputStat” to identify the first column in the PluginModel. The Flag attribute can also be used to identify optional flags passed as parameters to the function, as I do in the parameter declaration <Flag()> INCLUDE_COUNT As Boolean. Apparently, these flags must be Booleans, because I received the following warning in the FUNCTION_SIGNATURE column of DMSCHEMA_MINING_FUNCTIONS whenever I used other data types with a flag I called ATTRIBUTE_NUMBER, which I later removed: “Invalid Flag type (must be Boolean) in method MyCustomMiningFunction:ATTRIBUTE_NUMBER.” The algorithm will still deploy correctly if these parameters are set wrong, but it will return error messages when prediction queries are executed against the custom functions. The parameter list may also include a MiningCase object, which is not exposed to end users and causes SSDM to automatically iterate over the case set supplied in a prediction join, OpenQuery, or other such statement. As we shall see, one of the primary challenges with custom mining functions is to calculate cumulative statistics by iterating over this MiningCase object.

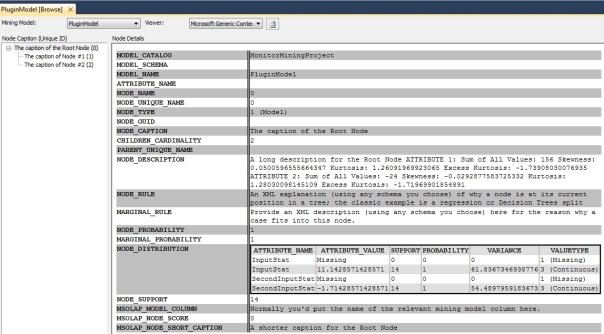

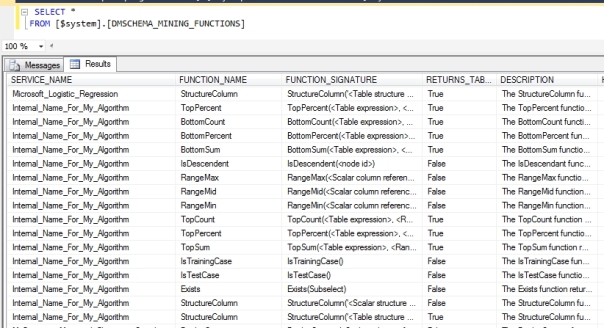

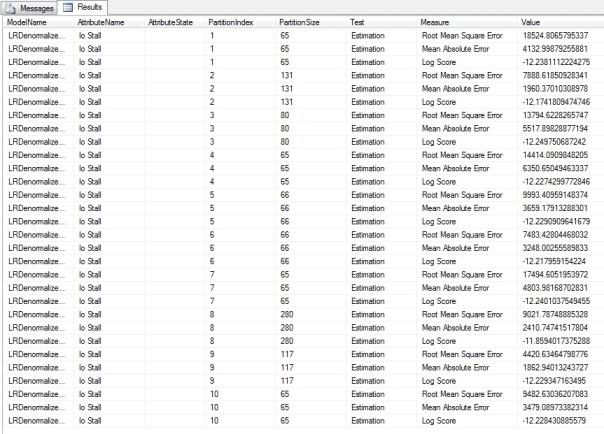

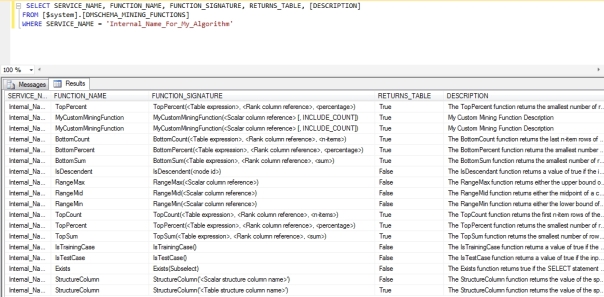

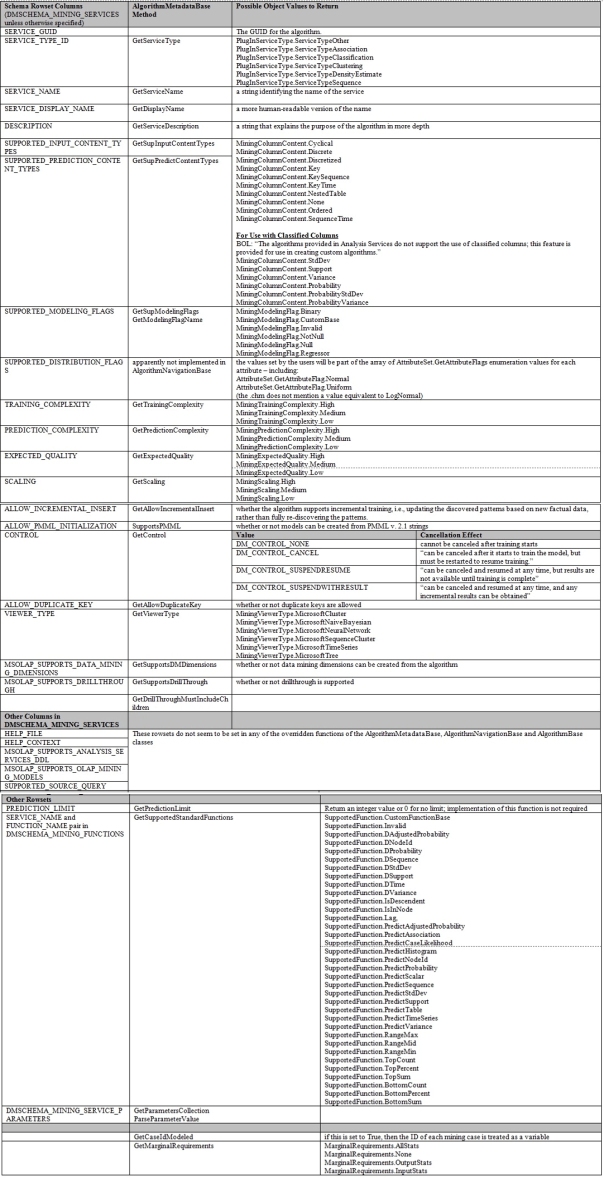

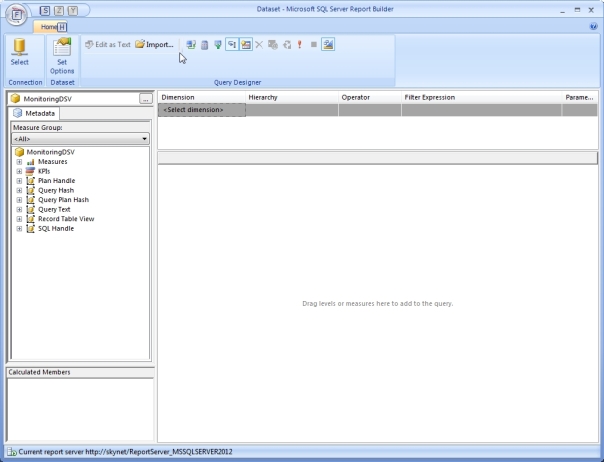

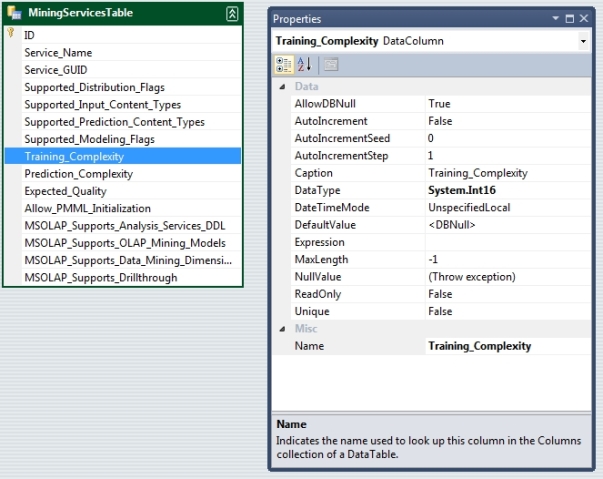

…………You can return basically any values you want from your function, but if they’re scalar, they must be declared as System.Object. To return more than one value, your only choice is to declare the return type as System.Data.DataTable. If your revised AlgorithmBase code compiles and deploys correctly, then the next time the service starts you should see your choice reflected in the RETURNS_TABLE column of DMSCHEMA_MINING_FUNCTIONS. Note how the query in Figure 1 reflects the name of the method we added to AlgorithmBase in the FUNCTION_NAME column, the string from the MiningFunctionAttribute in the DESCRIPTION column and the list of parameters in FUNCTION_SIGNATURE. Some other standard functions enabled for our sample plug-in are also listed in the results (minus the prediction functions I enabled for the last tutorial, which were removed to shorten the list).

Figure 1: The Custom Mining Function Depicted in DMSCHEMA_MINING_FUNCTIONS

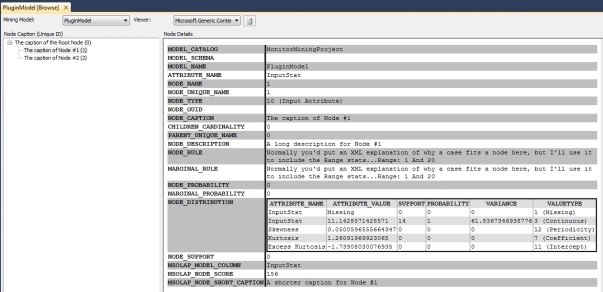

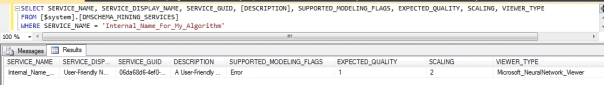

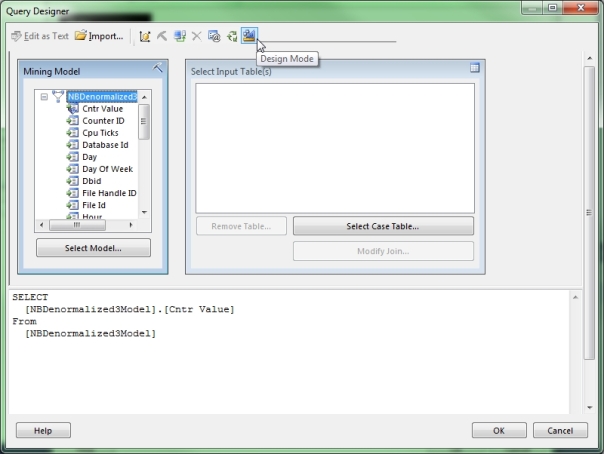

…………Note that MyCustomMiningFunction is listed twice; this is because it is declared in AlgorithmBase and also appears as SupportedFunction.CustomFunctionBase in the array returned by AlgorithmMetadataBase.GetSupportedStandardFunctions. Removing the function from AlgorithmBase without also removing SupportedFunction.CustomFunctionBase from that array led to this error on msmdsrv, when the algorithm failed to initialize: “The data mining algorithm provider (ProgID: 06da68d6-4ef0-4cea-b4dd-1a7c62801ed2) for the Internal_Name_For_My_Algorithm algorithm cannot be loaded. COM error: COM error: DMPluginWrapper; Parameter name: in_FunctionOrdinal Actual value was 10000.” Another “gotcha” you may encounter is the warning that “PREDICTION JOIN is not supported” for a particular mining model when you try to use the function in a query. After many comparisons to the original sample code provided in the plug-in software development kit (SDK) by Bogdan Crivat, one of the original developers of SSDM, I was able to narrow this down to the cases in which the GetCaseIdModeled method of AlgorithmMetadataBase returns True. Changing the return value of that method to False ought to fix the problem. Another common mistake you may encounter while testing custom mining functions is leaving off the ON clause in the PREDICTION JOIN, which may result in this warning: “Parser: The end of the input was reached.” As renowned SQL Server Analysis Services (SSAS) guru Chris Webb says in his helpful post Error messages in MDX SELECT statements and what they mean, this can also be due to missing parentheses, brackets and semi-colons. All of these myriad issues were fairly inconsequential in comparison to those that arose when I debugged the AlgorithmNavigationBase class in A Rickety Stairway to SQL Server Data Mining, Part 14.4: Node Navigation, which is far easier than troubleshooting AlgorithmBase and less difficult by several orders of magnitude than dealing with exceptions in AlgorithmMetadataBase. The biggest challenge I encountered while writing the sample code depicted in Figure 2 was calculating cumulative values across iterations over the MiningCase object, which were problematic to persist.

Figure 2: Sample Code for MyCustomMiningFunction

<MiningFunction("My Custom Mining Function Description")>

Public Function MyCustomMiningFunction(InputCase As MiningCase, RecordCount As Integer, <MiningScalarColumnRefAttribute()> TempAttributeGroup As AttributeGroup, <Flag()> INCLUDE_COUNT As Boolean) As System.Data.DataTable

Static CaseCount As UInteger = 0 'the 0th case will be the Prepare statement

'for whatever reason, the first InputCase is always Nothing

'we can use that opportunity to set up the data table one time only

If IsNothing(InputCase) = True Then

MyCustomMiningFunctionDataTable = New System.Data.DataTable 'on the first iteration, reset the datatable

MyCustomMiningFunctionDataTable.Columns.Add("Skewness", GetType(Double))

MyCustomMiningFunctionDataTable.Columns.Add("Kurtosis", GetType(Double))

MyCustomMiningFunctionDataTable.Columns.Add("ExcessKurtosis", GetType(Double))

MyCustomMiningFunctionDataTable.Columns.Add("JBStatistic", GetType(Double))

If INCLUDE_COUNT = True Then

MyCustomMiningFunctionDataTable.Columns.Add("TotalRows", GetType(UInteger))

End If

'once the data type is set, we can return it one time, in the event of a Prepare statement

'I will have to double-check later and make sure this statement doesn't need to execute for each row during Prepared statements

'Bogdan: "If the execution is intended for a Prepare statement, it returns an empty string. A Prepare execution must return data that has the same type and schema (in case of tables) with the real result."

'so if it is only for a prepare statement, we'd send it back the empty data table?

'chm: on Context.ExecuteForPrepare: "Determines whether the current query is preparing the execution or it is fully executing. Should only be used in the implementation of custom functions"

If Context.ExecuteForPrepare = True Then

Return MyCustomMiningFunctionDataTable

End If

Else

'dealing with actual cases; add them to the cumulatives so we can perform calculations at the end

'for now we will deal with a single attribute for the sake of simplicity; it would be fairly easy though to extend this to deal with more than one attribute at a time

Try

If CaseCount < RecordCount - 1 Then 'RecordCount is 1-based, CaseCount is not

'both variables are 0-based

If CaseCount = 0 Then

ReDim TempSkewnessKurtosisClassArray(0) 'initialize the arrays one time; this won't work during the Preparation phase when InputCase = 0, because other variables will be set to 1

ReDim TempDataPointArray(0, RecordCount - 1)

TempSkewnessKurtosisClassArray(0) = New SkewnessKurtosisClass

End If

TempDataPointArray(0, CaseCount) = New DataPointClass

TempDataPointArray(0, CaseCount).DataPoint = InputCase.DoubleValue

TempSkewnessKurtosisClassArray(0).TotalValue = TempSkewnessKurtosisClassArray(0).TotalValue + InputCase.DoubleValue

CaseCount = CaseCount + 1

Else

'add the last set

If IsNothing(TempSkewnessKurtosisClassArray(0)) Then TempSkewnessKurtosisClassArray(0) = New SkewnessKurtosisClass

TempDataPointArray(0, CaseCount) = New DataPointClass

TempDataPointArray(0, CaseCount).DataPoint = InputCase.DoubleValue

TempSkewnessKurtosisClassArray(0).TotalValue = TempSkewnessKurtosisClassArray(0).TotalValue + InputCase.DoubleValue

CaseCount = CaseCount + 1

'if we're on the final case, calculate all of the stats

Dim TempRow As System.Data.DataRow

TempRow = MyCustomMiningFunctionDataTable.NewRow()

TempSkewnessKurtosisClassArray(0).Mean = SkewnessKurtosisClassArray(0).CalculateAverage(RecordCount, TempSkewnessKurtosisClassArray(0).TotalValue)

TempSkewnessKurtosisClassArray(0).StandardDeviation = SkewnessKurtosisClassArray(0).CalculateAverage(RecordCount, TempSkewnessKurtosisClassArray(0).Mean)

TempRow.Item("Skewness") = TempSkewnessKurtosisClassArray(0).CalculateSkewness(TempDataPointArray, 0, TempSkewnessKurtosisClassArray(0).Mean, TempSkewnessKurtosisClassArray(0).StandardDeviation)

TempRow.Item("Kurtosis") = TempSkewnessKurtosisClassArray(0).CalculateKurtosis(TempDataPointArray, 0, TempSkewnessKurtosisClassArray(0).Mean, TempSkewnessKurtosisClassArray(0).StandardDeviation)

TempRow.Item("ExcessKurtosis") = TempSkewnessKurtosisClassArray(0).CalculateExcessKurtosis(TempSkewnessKurtosisClassArray(0).Kurtosis)

TempRow.Item("JBStatistic") = TempSkewnessKurtosisClassArray(0).CalculateJarqueBeraTest(RecordCount, TempSkewnessKurtosisClassArray(0).Skewness, TempSkewnessKurtosisClassArray(0).ExcessKurtosis)

If INCLUDE_COUNT = True Then

TempRow.Item("TotalRows") = RecordCount

End If

MyCustomMiningFunctionDataTable.Rows.Add(TempRow) 'add the row

CaseCount = 0 'reset the counter

Array.Clear(TempSkewnessKurtosisClassArray, 0, TempSkewnessKurtosisClassArray.Length) 'clear the arrays so that there is no overhead for using a class-scoped variable

Array.Clear(TempDataPointArray, 0, TempDataPointArray.Length)

Return MyCustomMiningFunctionDataTable

'then reset the counters so that the function starts off fresh the next time it is called

'any code I set after this to reset the DataTable won't be hit, so hopefully SSDM does garbage collection on its own; the best I can do is reinitialize the values in the event of an error

Exit Function 'so we don't Return it again

End If

Catch ex As Exception

'if there's an exception the static and class-scoped variables for this function must be reset, otherwise they will interfere with the next execution

CaseCount = 0 'reset the counter

Array.Clear(TempSkewnessKurtosisClassArray, 0, TempSkewnessKurtosisClassArray.Length) 'clear the arrays so that there is no overhead for using a class-scoped variable

Array.Clear(TempDataPointArray, 0, TempDataPointArray.Length)

MyCustomMiningFunctionDataTable.Rows.Clear() 'clear all the rows

MyCustomMiningFunctionDataTable = Nothing

End Try

End If

Return MyCustomMiningFunctionDataTable

End Function

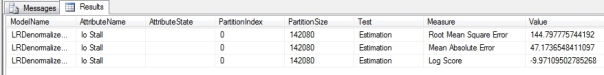

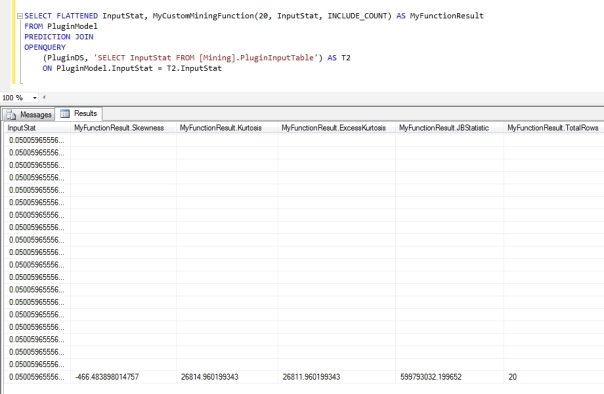

…………Since there are already a handful of better tutorials out there that demonstrate how to write a custom mining function (most notably Crivat’s sample code in the SDK), I wanted to make a contribution of sorts by pushing the boundaries of the architecture. Since the very basic mining method I developed in A Rickety Stairway to SQL Server Data Mining, Part 14.2: Writing a Bare Bones Plugin Algorithm merely returned some basic global stats for each column that I wanted to learn more about, like skewness, kurtosis and excess kurtosis, I figured it would be interesting to calculate the same figures for the values supplied in a prediction join. For good measure, I added a method to the SkewnessKurtosisClass I used in Post 14.2 to perform Jarque-Bera tests, which use measures of distribution shape like skewness and kurtosis to determine how well datasets fit a normal distribution. I used the formula available at the Wikipedia page Jarque-Bera Test, which is fairly easy to follow in comparison to more complicated goodness-of-fit tests. I soon discovered, however, that keeping track of the cumulative stats required to calculate these measures is problematic when using custom mining functions. SQL Server will make numerous calls to your function, depending on how many input cases there are, but there isn’t a straightforward way to keep track of values across these calls. As a result, I had to create two class-scoped variable arrays that mirrored the AlgorithmBase’s SkewnessKurtosisClassArray and DataPointArray variables, which I explained how to use in Post 14.2. The static CaseCount variable I declared at the beginning of the function is used in conditional logic throughout the outer Else…End If statement to control the population of the TempDataPointArray. The DataPointClass objects that accumulate in this array with each iteration of the function are fed en masse to the routines that calculate skewness, kurtosis, excess kurtosis and the Jarque-Bera test when the CaseCount reaches the final input case. On this final pass, those four stats are also added to the single row that comprises the data table the function returns. This MyCustomMiningFunctionDataTable variable is defined as an instance of System.Data.DataTable in the outer If…Else block, which will only be triggered when InputCase has a value of Nothing. This condition is only met on the first pass through the function, which is apparently designed solely to provide a means of declaring the return variable. The Context.ExecuteForPrepare condition is only true on this first pass. Special exception-handling logic is required to reset the values of the class-scoped variables and the static CaseCount in the event of an error, which is handled by the Catch…End Try block at the end of the method. The last five lines in the End If immediately above the Catch…End Try block perform pretty much the same function, but only after the final pass executes successfully. If these two blocks of code were not included, the CaseCount would continue to accumulate and the calculations on the TempDataPointArray and TempSkewnessKurtosisClassArray would at best throw exceptions, or worse yet, return incorrect results.
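…………The accumulate-then-finalize pattern described above can be sketched outside of SSDM. What follows is only a minimal Python illustration, not the plug-in code: the CumulativeStats class and its add_case and reset methods are hypothetical names, the moments use the divide-by-n estimators that the Wikipedia formula for the Jarque-Bera statistic is built on, and the reset after an exception stands in for the Catch…End Try block.

```python
class CumulativeStats:
    """Hypothetical stand-in for the static and class-scoped state in the function."""

    def __init__(self, record_count):
        self.record_count = record_count  # supplied by the caller, like RecordCount
        self.values = []                  # plays the role of TempDataPointArray

    def reset(self):
        # Must run after the final case or after an error; otherwise
        # leftover values would leak into the next query.
        self.values = []

    def add_case(self, value):
        """Return None until the final case arrives, then a dict of statistics."""
        try:
            self.values.append(float(value))
            if len(self.values) < self.record_count:
                return None
            result = self._finalize()
        except Exception:
            self.reset()
            raise
        self.reset()
        return result

    def _finalize(self):
        n = len(self.values)
        mean = sum(self.values) / n
        # Central moments with the divide-by-n (population) convention.
        m2 = sum((x - mean) ** 2 for x in self.values) / n
        m3 = sum((x - mean) ** 3 for x in self.values) / n
        m4 = sum((x - mean) ** 4 for x in self.values) / n
        skewness = m3 / m2 ** 1.5
        kurtosis = m4 / m2 ** 2
        excess = kurtosis - 3.0
        # Jarque-Bera: JB = n/6 * (S^2 + (K - 3)^2 / 4)
        jb = n / 6.0 * (skewness ** 2 + excess ** 2 / 4.0)
        return {"Skewness": skewness, "Kurtosis": kurtosis,
                "ExcessKurtosis": excess, "JBStatistic": jb, "TotalRows": n}
```

Feeding the values 1 through 5 to CumulativeStats(5).add_case one at a time returns None four times and a dictionary of results on the fifth call, after which the internal list is empty again and ready for the next query.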

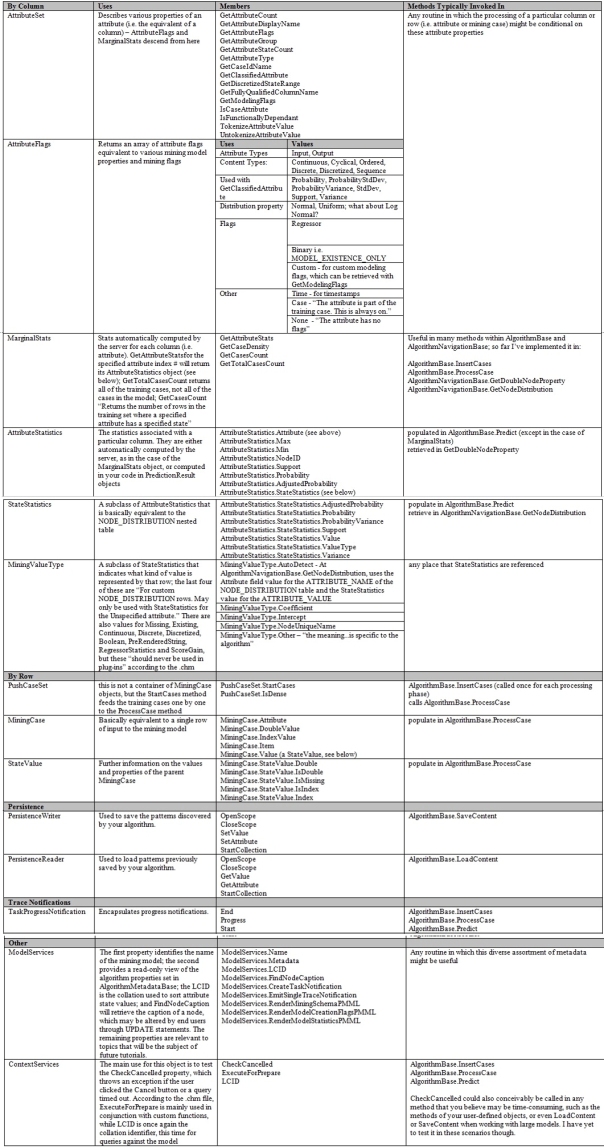

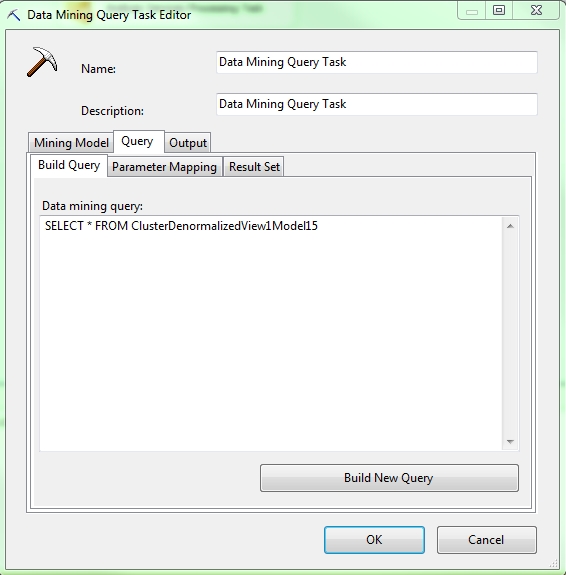

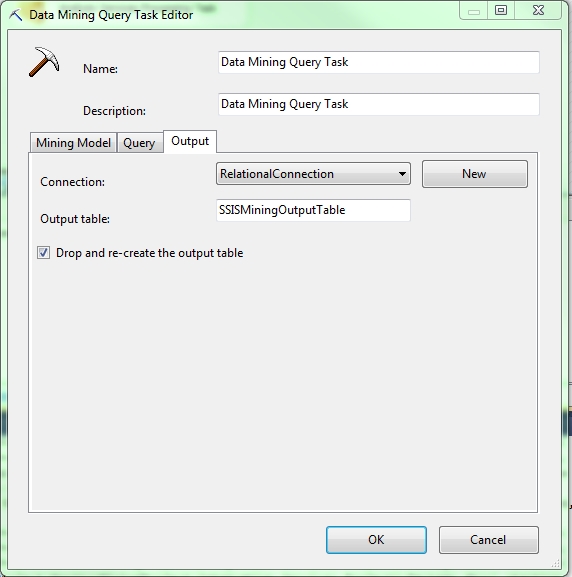

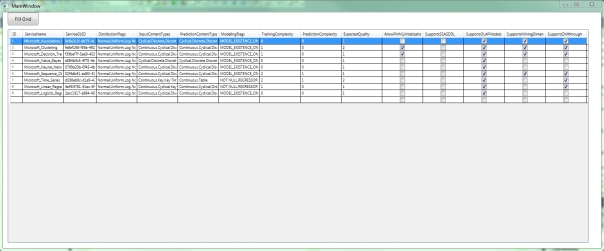

…………Ideally, it would be easier to retrieve the count of input rows from the function itself or some variable exposed by SSDM, or at least find a way to determine when the function has exited, but thus far I have not found such a means. One alternative I tried was to use a CaseSet as an input parameter, but the DESCRIPTION column of the DMSCHEMA_MINING_FUNCTIONS rowset returned an “Invalid parameter type” error. Defining InputCase as an array of MiningCase objects will produce a different error when you run a Data Mining Extensions (DMX) query, including the text, “Too many arguments or flags were supplied for the function.” Nor can you return multiple rows, one at a time; this is why there are three Return statements in the function, one for the initial ExecuteForPrepare phase, one for returning all of the data en masse one time only in a single table, and another for cases in which an empty table is returned because there are no results to report. Returning a value of Nothing instead of the return type declared in the ExecuteForPrepare phase will raise an error, whereas returning an empty table with no rows is also undesirable, since it adds an extra line to the output. If you return a null value instead of the declared return type, you will also receive an error in SQL Server Management Studio (SSMS) worded like this: “COM error: COM error: DMPluginWrapper; Custom Function MyCustomMiningFunction should return a table, but it returned NULL.” There may still be errors lurking in the conditional logic I implemented in this function, but it at least amounts to the crude beginnings of a workaround for the problem of calculating stats dependent on cumulative values in custom mining functions. Would-be plug-in developers are basically stuck with iterating over the input cases one row at a time, rather than operating on an entire dataset, so some type of workaround involving static or class-scoped variables may be necessary when calculating cumulatives. Displaying the results with this type of hack is also problematic, as Figure 3 demonstrates. It is necessary to add another column to the results, as the first reference to “InputStat” does, otherwise FLATTENED results will only display a single blank row, but this means each row in the input set (taken from an OpenQuery on the PluginModel’s data source) will have a corresponding row in the results. In this case, each of the 20 input cases in our dataset is represented by the skewness value calculated for the training set of the InputStat column. It is possible to omit this superfluous column by removing the FLATTENED statement, but then it returns one empty nested table for each row in the input set. Either way, the only row we’re interested in is the last one, which depicts the skewness, kurtosis, excess kurtosis and Jarque-Bera statistic for the entire dataset. The TotalRows column is also populated with the value the user input for RecordCount when the INCLUDE_COUNT flag is specified; this takes just six lines of code in two separate sections of Figure 2, one to add the column to the return table and another to assign the value. To date, this is still impractical because I haven’t found a way to use a TOP, ORDER BY, sub-select or other clause to return only the row with the cumulative statistics we calculated, but my ugly hack at least shows that cumulatives can be calculated with custom mining functions. That’s not something that’s ordinarily done, but this comparatively short topic seemed an ideal place to contribute a little bleeding edge experimentation. The “Rickety” disclaimer I affixed to this whole series is sometimes apt, because I’m learning as I go; the only reason I’m fit to write amateur tutorials of this kind is that there is so little information out there on SSDM, which is an underrated feature of SQL Server that is badly in need of free press of any kind. In the next installment of this series, we’ll delve into other advanced plug-in functionality, like custom mining flags, algorithm parameters and feature selection.

Figure 3: Sample Query Result for the Custom Mining Function (With the Cumulatives Hack)

A Rickety Stairway to SQL Server Data Mining, Part 14.5: The Predict Method

By Steve Bolton

…………In order to divide the Herculean task of describing custom algorithms into bite-sized chunks, I omitted discussion of some plug-in functionality from previous installments of this series of amateur tutorials on SQL Server Data Mining (SSDM). This week’s installment ought to be easily digestible, since it deals with a single method in the AlgorithmBase class that is relatively easy to implement – at least in comparison to the material covered in the last four articles, which is fairly thick. For primers on such indispensable topics as the benefits of the plug-in architecture, the object model, the arrangement of nodes in the mining results and debugging and deployment of custom mining methods, see those previous posts. The Predict method is marked MustOverride, which signifies that it must be implemented in order for any SSDM plug-in to compile and run successfully, but it is possible to leave the method body blank, as we did in these previous tutorials, in order to get the bare bones plug-in to return some simple results. Until it is fleshed out, the plug-in sample code we’ve been working with in recent weeks won’t return any results for prediction queries.

…………As I mentioned in those previous posts, I’m writing the sample plug-in code in Visual Basic .Net, in part because there’s a distinct lack of SSDM code written in VB on the Internet, and in part because it’s my favorite language. I am much more familiar with it than C#, which is what most of the available plug-in tutorials and sample code are written in. Like most of the code I’ve posted so far in this segment of the Rickety series, my implementation of the Predict method originally came from the samples provided by Bogdan Crivat (one of the founding SSDM programmers) in the plug-in software development kit (SDK). After converting to VB using online tools, changing the variable names to my liking, adding implementations of some objects found in the SDK help file and otherwise reworking it, the code is now practically unrecognizable. The design pattern and logical flow are pretty much the same, however, and my version would not have worked at all without Crivat’s indispensable sample code to guide me. My goal is merely to describe the basic tasks any plug-in programmer typically has to perform in order to return the simplest of results from prediction queries, which is a fairly straightforward task. That does not mean, however, that the Predict method cannot be insanely complex when a particular algorithm requires really advanced functionality; like most of the other plug-in class methods we’ve covered so far, there is plenty of room to add intricate conditional logic when the situation calls for it. An introductory tutorial like this is not one of those situations, because of the risk of clutter, so I’ll just stick to the basics of what can or must be done in the Predict method. I’ll leave fancier implementations of that functionality up to the imaginations of intrepid plug-in programmers.

…………Most of the plug-in methods we’ve dealt with so far are commonly linked to a couple of classes in the object model, but the relationship between the Predict method and the PredictionResult class is even tighter than in many of these cases. SSDM automatically populates the method with MiningCase objects, which we worked with back in A Rickety Stairway to SQL Server Data Mining, Part 14.2: Writing a Bare Bones Plugin Algorithm, plus a single PredictionResult that reflects the structure of the prediction query specified by the end user. For example, the class has a MaxPredictions member that is equivalent to the n parameter of the Predict function, to limit the number of records returned; an IncludeNodeId member to return a string value for the NODE_ID when the user specifies the INCLUDE_NODE_ID flag in the query; and AttributeSortBy and AttributeSortDirection members that describe the arrangement of columns specified in the ORDER BY clause. If the user also used the INCLUDE_STATISTICS flag, then SSDM will also return a NODE_DISTRIBUTION table with the results that is limited to the number of predictions set in the MaxStates property. Any order assigned to this dependent table will likewise be reflected in the StateSortBy and StateSortDirection properties. StateSortBy and AttributeSortBy both take values of the SortBy enumeration, which includes values like SortBy.AdjustedProbability, SortBy.Probability, SortBy.Support, SortBy.Variance and SortBy.None, while the other two properties take either SortDirection.Ascending or SortDirection.Descending values. The good news is that we don’t have to worry about any of these sorting properties, because as Crivat says in the SDK tutorial, “…you do not need to take them into account during the prediction, except if your patterns layout allows you to dramatically optimize the performance. The infrastructure will push the attribute predictions in a heap structure, sorted by probability, support, or adjusted probability as requested in the prediction operation.” In other words, it’s an advanced lesson far beyond the scope of this crude tutorial series. I imagine that in such a scenario, you would add conditional logic to alter the results returned by the query, depending on which sort method was in use. For example, you might implement a Select Case like the one in Figure 1, which could also be performed on a PredictionResult.StateSortBy value:

Figure 1: Sample Conditional Logic for a SortBy Value

Select Case PredictionResult.AttributeSortBy

Case PredictionResult.SortBy.AdjustedProbability

Case PredictionResult.SortBy.Probability

Case PredictionResult.SortBy.Support

Case PredictionResult.SortBy.Variance

Case PredictionResult.SortBy.None

End Select

…………That begs the question: what belongs in each Case clause? The answer might include any of the remaining operations we’ll perform with PredictionResult in the rest of this tutorial, many of which could be encapsulated in one of these Case statements. A typical implementation of the Predict method would begin with a While loop like the one in Figure 2, which iterates over the OutputAttributes collection of the supplied PredictionResult. The PredictionCounter variable also limits the loop by the value of the MaxPredictions property and is incremented with each iteration just before the End While, which occurs near the end of the method. The AttributeSet.Unspecified variable supplies the index of the currently selected output attribute in the AttributeSet collection; it is zero-based, so we won’t be subtracting one from some of the arrays we’ll deal with in the meat and potatoes of the method, unlike in some other methods we’ve dealt with in the past. I normally prefer to iterate over explicit arrays, but had to use a While loop in this case because AttributeGroup objects like the OutputAttributes member have only two commands to control iteration, Next and Reset. The Select Case that follows the While declaration merely determines whether or not the currently selected attribute is disallowed by the PredictionResult.InputAttributesTreatment property. If not, then we create an AttributeStatistics object to hold the results we’ll be returning.
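…………The shape of that loop, a cursor that offers only Reset and Next plus a counter that caps the number of predictions, can be illustrated with a minimal Python sketch. Nothing here is part of the SDK: AttributeCursor, collect_predictions and the is_allowed callback are hypothetical names, and the sketch simply treats a limit of zero as "return nothing" rather than guessing how SSDM interprets MaxPredictions = 0.

```python
class AttributeCursor:
    """Hypothetical cursor exposing only reset() and next(), as the text describes."""

    def __init__(self, attribute_indexes):
        self._indexes = list(attribute_indexes)
        self._position = -1

    def reset(self):
        # Rewind so the next call to next() yields the first attribute.
        self._position = -1

    def next(self):
        """Advance and return the next attribute index, or None when exhausted."""
        self._position += 1
        if self._position >= len(self._indexes):
            return None
        return self._indexes[self._position]


def collect_predictions(cursor, max_predictions, is_allowed):
    """Walk the cursor, keeping at most max_predictions allowed attributes."""
    cursor.reset()
    predictions = []
    while len(predictions) < max_predictions:
        attribute = cursor.next()
        if attribute is None:
            break
        # The count only grows when a prediction is actually made,
        # mirroring the placement of the PredictionCounter increment.
        if is_allowed(attribute):
            predictions.append(attribute)
    return predictions
```

Because the counter is tied to accepted attributes rather than loop passes, excluded attributes do not use up the limit.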

Figure 2: Complete Sample Code for the Predict Method

Protected Overrides Sub Predict(InputCase As Microsoft.SqlServer.DataMining.PluginAlgorithms.MiningCase, PredictionResult As Microsoft.SqlServer.DataMining.PluginAlgorithms.PredictionResult)

PredictionResult.OutputAttributes.Reset() ‘an AttributeGroup object

Dim PredictThisAttributeBoolean = False

Dim PredictionCounter As UInt32 = 0 ‘for determining whether or not we’ve reached the limit that may be defined in PredictionResult.MaxPredictions

‘I’m not sure yet but I think MaxPredictions is unlimited when = 0

While PredictionResult.OutputAttributes.Next(AttributeSet.Unspecified) = True And PredictionCounter < PredictionResult.MaxPredictions

‘OK if the current attribute is input, or output, matches AttributeSet.GetAttributeFlags(1), but

Select Case PredictionResult.InputAttributeRestrictions

Case PredictionResult.InputAttributesTreatment.ExcludeInputAttributes And Me.AttributeSet.GetAttributeFlags(AttributeSet.Unspecified).ToString Like “*Input*”

PredictThisAttributeBoolean = False

Case PredictionResult.InputAttributesTreatment.PredictOnlyInputAttributes And Me.AttributeSet.GetAttributeFlags(AttributeSet.Unspecified).ToString Like “*Output*”

PredictThisAttributeBoolean = False

Case PredictionResult.InputAttributesTreatment.None ‘this one really belongs in the Case Else, but I want to demonstrate it

PredictThisAttributeBoolean = True

Case Else

PredictThisAttributeBoolean = True

End Select

If PredictThisAttributeBoolean = True Then

Dim AttributeStatsToAdd As New AttributeStatistics()

AttributeStatsToAdd.Attribute = AttributeSet.Unspecified ‘the target attribute

AttributeStatsToAdd.AdjustedProbability = MarginalStats.GetAttributeStats(AttributeSet.Unspecified).AdjustedProbability

AttributeStatsToAdd.Max = MarginalStats.GetAttributeStats(AttributeSet.Unspecified).Max

AttributeStatsToAdd.Min = MarginalStats.GetAttributeStats(AttributeSet.Unspecified).Min

AttributeStatsToAdd.Probability = MarginalStats.GetAttributeStats(AttributeSet.Unspecified).Probability

AttributeStatsToAdd.Support = MarginalStats.GetAttributeStats(AttributeSet.Unspecified).Support

‘test to see if the Missing state ought to be returned (SSDM will filter out data that is not requested in the query, but there’s no point wasting resources letting it get to that point

If PredictionResult.IncludeStatistics = True Then

For I As Integer = 0 To MarginalStats.GetAttributeStats AttributeSet.Unspecified).StateStatistics.Count – 1

If (I <> 0 And I > PredictionResult.MaxStates) Then Exit For ‘exit if we’ve hit the MaxStates, if there is a limit

If MarginalStats.GetAttributeStats(AttributeSet.Unspecified).StateStatistics(I).Value.IsMissing = True And PredictionResult.IncludeMissingState = False Then

‘don’t add this node, because it’s the missing state

Else ‘in all other cases, add it

‘AttributeStatsToAdd.StateStatistics.Add(MarginalStats.GetAttributeStats(AttributeSet.Unspecified).StateStatistics(CUInt(I)))

Dim SkewnessState As New StateStatistics()

SkewnessState.ValueType = MiningValueType.Other

SkewnessState.Value.SetDouble(SkewnessKurtosisClassArray(AttributeSet.Unspecified).Skewness)

‘don’t subtract 1 because Unspecified is zero-based

SkewnessState.AdjustedProbability = MarginalStats.GetAttributeStats(AttributeSet.Unspecified).StateStatistics(CUInt(I)).AdjustedProbability

SkewnessState.Probability = MarginalStats.GetAttributeStats(AttributeSet.Unspecified).StateStatistics(CUInt(I)).Probability

SkewnessState.Support = MarginalStats.GetAttributeStats(AttributeSet.Unspecified).StateStatistics(CUInt(I)).Support

SkewnessState.ProbabilityVariance = MarginalStats.GetAttributeStats(AttributeSet.Unspecified).StateStatistics(CUInt(I)).ProbabilityVariance

SkewnessState.Variance = MarginalStats.GetAttributeStats(AttributeSet.Unspecified).StateStatistics(CUInt(I)).Variance

AttributeStatsToAdd.StateStatistics.Add(SkewnessState)

End If

Next I

End If

If PredictionResult.IncludeNodeId Then

AttributeStatsToAdd.NodeId = AttributeSet.GetAttributeDisplayName(AttributeSet.Unspecified, False)

End If

‘ Add the result to the predictionResult collection

PredictionResult.AddPrediction(AttributeStatsToAdd)

PredictionCounter = PredictionCounter + 1 ‘increment it only if a prediction is actually made (which it may not be if a comparison of the Input/Output to InputAttributeRestrictions leads to an exclusion)

End If

End While

End Sub

…………This sample (I apologize for the sloppy code formatting, but the WordPress GUI is sometimes more challenging to work with than SSDM Extensibility) merely assigns the values of a column’s MarginalStats object to the new AttributeStatistics object, which ought to be a familiar operation at this point in the tutorial series. It then tests the IncludeStatistics member to see if we ought to include the NODE_DISTRIBUTION table, followed by a test of the IncludeMissingState property, then creates a new StateStatistics object if everything passes. The VALUETYPE of the single NODE_DISTRIBUTION table row this represents is set to MiningValueType.Other and the AdjustedProbability, Probability, Support, ProbabilityVariance (i.e. Standard Deviation, or StDev) and Variance are set to the corresponding values in the MarginalStats object for that column. The most important part is the Value.SetDouble statement, which sets the ATTRIBUTE_VALUE to the skewness statistic for that column, which we computed in previous tutorials. The StateStatistics object is then added to the AttributeStatistics object. Note how that section of code resembles the assignments of state stats in Post 14.4. You could conceivably add multiple rows of StateStatistics for each AttributeStatistics, or multiple AttributeStatistics for each column you return, depending on the needs of your algorithm. The next order of business is to add conditional logic to deal with instances in which IncludeNodeId is True; in this case, I’m simply returning the full name of the output column. The newly populated AttributeStatistics object is then added to the PredictionResult’s collection of predictions that the method will return, the PredictionCounter variable I created is incremented, then the While loop either makes another pass or the method returns.

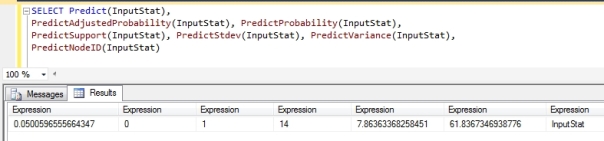

…………The logic you implement in the Predict method might be complex but the basics of its operation are not, in part because most of the PredictionResult properties are set by the query writer and therefore read-only. Debugging of the Predict method is far easier than with the AlgorithmNavigationBase class we discussed in the last post, which is in turn far less of a hassle than troubleshooting the other AlgorithmBase members and several orders of magnitude easier than dealing with errors in the AlgorithmMetadataBase class. One of the few issues I ran into initially was a series of errors whose text included the word “GetResultFromAttStats,” which might belong on Jeannine Takaki’s incredibly valuable thread on undocumented SSDM stored procedures. Presently, it is so undocumented that it only returns two hits on Google. One of these is an MSDN thread titled “problem with managed code plug-in to analysis services,” which had no replies. I was fortunate to find this sentence in Gaël Fatou’s invaluable reply at the MSDN thread “Managed Plugin-Algorithm”: “…if (trainingStats.StateStatistics[0].Value.IsMissing) will not work, as it will always only consider the first state (which is always missing). The current state must be considered instead: if (trainingStats.StateStatistics[nIndex].Value.IsMissing).” This is due to an error in the SDK sample code that has apparently bitten at least one other plug-in developer; the fix is to replace the [0] in the StateStatistics[0].Value.IsMissing statement with the proper state stats index. I never encountered GetResultFromAttStats errors after applying this solution, but first-time plug-in developers ought to be aware of this stumbling block in advance. I also received a couple of “Object reference not set to an instance of an object” errors that referenced GetAttributeFlags, a method of AttributeSet that I was calling incorrectly in my IncludeNodeID code.
Another problem I encountered that was equally easy to fix was forgetting to enable the prediction functions I called in my test query, which led SQL Server Management Studio (SSMS) to return error messages with the text “…function cannot be used in the context” every time I ran the queries in Figures 3 and 4. It was a simple matter to edit the code of GetSupportedStandardFunctions in AlgorithmMetadataBase to return the correct SupportedFunction enumeration values. Out of the 37 available values, I selected PredictAdjustedProbability, PredictCaseLikelihood, PredictHistogram, PredictProbability, PredictSupport, PredictStdDev, PredictVariance, PredictScalar and PredictNodeId, which enabled me to return the results in the two figures below. I also enabled PredictTable, CustomFunctionBase and IsInNode for future experimentation. The .chm included with the SDK mentions that other Data Mining Extensions (DMX) functions like DAdjustedProbability, DNodeId, DStdDev, DVariance, DSupport, PredictAssociation and PredictSequence are also dependent on the output of the Predict method, but I didn’t implement some of these because they’re applicable to other types of algorithms, which are beyond the scope of this series. Note that the PredictHistogram function in the top graphic includes a row for the Missing state, while the first row contains the same values as the PredictSupport, PredictProbability, PredictAdjustedProbability, PredictVariance and PredictStDev functions in the bottom graphic. The Predict function at the bottom returns the same skewness value for the InputStat column we saw in last week’s post, as well as the name of the InputStat column for the PredictNodeID function. Most of these columns are standard return values in prediction queries, but in next week’s tutorial, I’ll demonstrate how to use custom mining functions to return more flexible result formats and perform tailor-made processing.
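To make the StateStatistics index pitfall described above concrete, here is a minimal, self-contained sketch. It does not use the plug-in SDK: DemoState and FilterStates are hypothetical stand-ins invented for illustration, and the assumption that index 0 holds the Missing state comes from the forum reply quoted earlier.

```vbnet
Imports System
Imports System.Collections.Generic

Module MissingStateDemo

    ' Hypothetical stand-in for the SDK's per-state statistics;
    ' only the two members this sketch needs are modelled.
    Class DemoState
        Public Property IsMissing As Boolean
        Public Property Support As Double
    End Class

    ' Returns the states that should be reported, testing each state by its own index.
    Function FilterStates(states As List(Of DemoState), includeMissing As Boolean) As List(Of DemoState)
        Dim kept As New List(Of DemoState)
        For i As Integer = 0 To states.Count - 1
            ' Test states(i), not states(0). A hard-coded zero always inspects
            ' the first state, so every pass through the loop would see "missing".
            If states(i).IsMissing AndAlso Not includeMissing Then Continue For
            kept.Add(states(i))
        Next
        Return kept
    End Function

    Sub Main()
        Dim states As New List(Of DemoState) From {
            New DemoState With {.IsMissing = True, .Support = 0},
            New DemoState With {.IsMissing = False, .Support = 42}
        }
        Console.WriteLine(FilterStates(states, False).Count) ' prints 1
        Console.WriteLine(FilterStates(states, True).Count)  ' prints 2
    End Sub

End Module
```

In a real Predict implementation the same idea applies to the loop variable used against StateStatistics, as in the For loop shown earlier in this post.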

Figures 3 and 4: The Results of Various DMX Prediction Functions

A Rickety Stairway to SQL Server Data Mining, Part 14.4: Node Navigation

By Steve Bolton

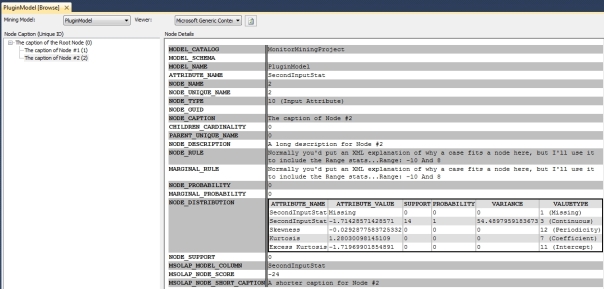

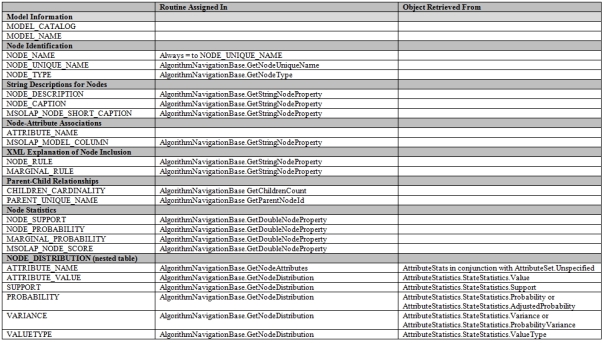

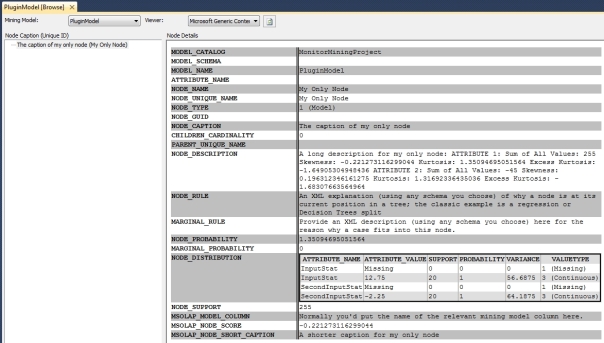

…………The pinnacle of difficulty in this series of amateur self-tutorials on SQL Server Data Mining (SSDM) was surmounted in the last installment, when we addressed the debugging and deployment of custom plug-in algorithms. From here on in, we will be descending back down the stairway, at least in terms of exertion required to squeeze practical benefit out of the lessons. In our first steps we’ll have to deal with a few aspects of plug-in development that were deferred in order to divide the tutorials into bite-sized chunks, particularly the topic of node navigation. The construction and navigation of a hierarchy of nodes out of our mining results takes place almost exclusively in the AlgorithmNavigationBase class, which we touched on briefly in A Rickety Stairway to SQL Server Data Mining, Part 14.2: Writing a Bare Bones Plugin Algorithm. In order to display rudimentary results in a single node in the Generic Content Viewer by the end of that article, it was necessary to return trivial or dummy values for many of the 18 methods in that class which were marked Must Override. The first bit of good news is that we’ve already introduced GetStringNodeProperty, GetDoubleNodeProperty and GetNodeDistribution, the methods responsible for assigning the values of familiar columns from SSDM’s common metadata format, like NODE_DESCRIPTION, NODE_RULE, MARGINAL_RULE, NODE_PROBABILITY, MARGINAL_PROBABILITY, NODE_DISTRIBUTION, NODE_SUPPORT, MSOLAP_MODEL_COLUMN, MSOLAP_NODE_SHORT_CAPTION and NODE_CAPTION. The second bit of good news is that debugging of AlgorithmNavigationBase is much simpler than that of AlgorithmBase, where most of the number-crunching occurs. It is several orders of magnitude easier than debugging AlgorithmMetadataBase, where errors can be difficult to track down since they cause SQL Server Analysis Services (SSAS) to skip loading faulty algorithms when the service starts up.
There are some pitfalls to constructing a node hierarchy out of your mining results, however, which can still be tricky to overcome.

…………Debugging of this class is much easier in part because we simply refresh the results in the Visual Studio GUI or SQL Server Management Studio (SSMS) in order to trigger breakpoints set here, whereas in AlgorithmBase or AlgorithmMetadataBase it is often necessary to reprocess mining models or restart the service. Restarts are still necessary after you deploy changes to the code, but not if you’re simply stepping through the results again. Expanding a closed node in the Generic Content Viewer may also cause various routines in AlgorithmNavigationBase to be called, which will activate any breakpoints you’ve set there in Visual Studio, assuming you’ve set them as prescribed in the last article. One of the more common errors you may see while debugging SSDM navigation may be worded like this: “Execution of the managed stored procedure GetAttributeValues failed with the following error: Exception has been thrown by the target of an invocation. The specified marginal node cannot be found in the mining model ‘PluginModel’.” The last sentence indicates that the culprit may be an inappropriate choice of data mining viewer types, which are defined when the service starts by the GetViewerType routine of AlgorithmMetadataBase. I encountered this frequently while debugging this project because I initially returned a value of MiningViewerType.MicrosoftNeuralNetwork in that routine. The plug-in we developed a couple of tutorials ago merely returns a few global statistics like skewness, kurtosis and excess kurtosis, so it is not surprising that the neural net viewer would be a poor choice. Curiously, every attempt I have made since that rookie mistake to rectify it by substituting a different MiningViewerType enumeration value in GetViewerType has produced a more serious GetAttributeValues error.
The text is the same except for the final line, which instead mentions the name of our practice plug-in algorithm at the end: “The targeted stored procedure does not support ‘Internal_Name_For_My_Algorithm’.” It apparently takes a little more work to change the viewer type of an algorithm once it’s been set, at least in some cases; it’s not a problem I’ve solved yet, but one plug-in developers should be wary of. It proved to be merely an inconvenience for the purposes of this series, since selecting Generic Content Viewer from the dropdown list in the Visual Studio GUI or SSMS works without errors whenever I return the original value of MicrosoftNeuralNetwork. Whenever I return results in a new window in one of those tools I receive the first GetAttributeValues error, then simply select the Generic Content Viewer, which is the only one we need to worry about in a bare bones plug-in like this.

…………Developers might notice that there is no GetAttributeValues member in the object model or the DMPluginWrapper class it is derived from, via the means outlined in A Rickety Stairway to SQL Server Data Mining, Part 14.1: An Introduction to Plug-In Algorithms. Nor does either one expose the GetAttributeScores routine, which is mentioned in some of the arcane error messages SQL Server occasionally returns when processing the nine algorithms included with the product, which we covered much earlier in this series. These are internal routines within the SSAS process, msmdsrv.exe, which we can’t assign breakpoints to in Visual Studio, let alone debug and recompile. By good fortune, I stumbled across a list of 20 such undocumented internal routines at Jeannine Takaki’s invaluable Technet post A Guide to the (Undocumented) System Stored Procedures for Data Mining, at the last minute while writing up this article. She explains the uses of each in detail and how to use the CALL syntax on them in Data Mining Extensions (DMX) code. GetAttributeValues and GetModelAttributes are the only two undocumented routines on the list which can be used with any one of the nine out-of-the-box algorithms. GetAttributeScores applies specifically to neural nets and Linear Regression. Some of the routines are specific only to the two Microsoft-supplied clustering algorithms, such as GetClusterCharacteristics, GetClusterDiscrimination, GetClusterProfiles and GetClusters, whereas ARGetNodes, GetItemsets, GetRules and GetStatistics only apply to the Association Rules algorithm. Linear Regression and Decision Trees likewise are the only data mining methods which make use of CalculateTreeDepth, DTAddNodes, DTGetNodeGraph, DTGetNodes and GetTreeScores, while GetAttributeCharacteristics, GetAttributeDiscrimination, GetAttributeHistogram and GetPredictableAttributes can only be used with Naïve Bayes mining models.
I have yet to experiment with them myself, but they all seem to be related to the retrieval of mining model results, which means you may encounter them when developing plug-ins that use the corresponding mining viewer types.

…………It is imperative to be conscious of the order in which SSDM triggers the 18 mandatory methods in AlgorithmNavigationBase when you retrieve the mining results in the GUI. The sequence begins in the GetNavigator routine of AlgorithmBase, which creates a new instance of AlgorithmNavigationBase, which in turn calls any code you’ve included in the constructor. In my case, all the New method does is set a reference to a class-scoped instance of the algorithm and a Boolean which indicates whether or not the navigation operation is being performed on a data mining dimension. I cannot verify that the rest of the sequence is the same for all mining models or all algorithms, but to date, I have seen SSDM invariably trigger the GetCurrentNodeID routine next. The next seven calls are to routines which set either the type or the name of the node: GetNodeType, GetNodeUniqueName, GetUniqueNameFromNodeID, GetNodeType again, a single call to GetStringNodeProperty to assign the NODE_CAPTION value, then GetNodeUniqueName and GetUniqueNameFromNodeID once more. I’m not sure what the purpose of repeating these calls to GetNodeType, GetNodeUniqueName and GetUniqueNameFromNodeID is; I only know that it occurs. The code for these routines is fairly trivial. GetNodeUniqueName has no parameters and merely calls GetUniqueNameFromNodeId, which converts the NodeID integer parameter supplied by SSDM into a string value. GetNodeIdFromUniqueName performs the same function as GetUniqueNameFromNodeID in reverse; in my example, I simply took the NodeUniqueName supplied by SSDM as a parameter and converted it to a 32-bit integer. I suppose other plug-in developers might have a genuine need to develop a much more complex naming scheme than in my trifling example of this class (which can be downloaded here), but for our purposes, there’s no real reason to complicate things.
GetCurrentNodeID is likewise called frequently during the retrieval of mining results, but only returns the value of the CurrentNode, which is a class-scoped integer that identifies the node the navigation class is currently assigning the values for. In the implementations I’ve seen so far, such as the tutorial that founding SSDM developer Bogdan Crivat included in the plug-in software development kit (SDK) and Microsoft Business Intelligence with Numerical Libraries: A White Paper by Visual Numerics, Inc., the value of the CurrentNode is not set in this routine, merely returned. So how do we direct SSDM to operate on a different node, or for that matter, determine the number and order of the nodes? That doesn’t occur in GetNodeType, which merely returns a value of the NodeType enumeration to assign the NODE_TYPE value we’ve seen returned throughout this series in SSDM’s common metadata format. You might need to know the number of the node in order to assign the proper value, which can be any one of the following: AssociationRule; Cluster; CustomBase; Distribution; InputAttribute; InputAttributeState; Interior; ItemSet; Model; NaiveBayesMarginalStatNode; NNetHiddenLayer; NNetHiddenNode; NNetInputLayer; NNetInputNode; NNetMarginalNode; NNetOutputLayer; NNetOutputNode; NNetSubNetwork; None; PredictableAttribute; RegressionTreeRoot; Sequence; TimeSeries; Transition; Tree; TSTree; and Unknown. In other words, we’d assign None, Unknown or one of the 25 NODE_TYPE values we’ve seen often throughout this series. In my implementation, I performed a Select Case on the value of CurrentNode and set a type of Root when it equaled zero and InputAttribute in all other cases.
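The naming and typing routines described above can be modelled without the SDK in a few lines. The following is only a sketch under stated assumptions: DemoNodeType is a hypothetical stand-in for the SDK’s NodeType enumeration, its Root member simply mirrors the wording in the paragraph above, and in the real class these routines are overrides that read the class-scoped CurrentNode rather than taking it as a parameter.

```vbnet
Imports System

Module NodeNamingDemo

    ' Hypothetical stand-in for the SDK's NodeType enumeration (two values only).
    Enum DemoNodeType
        Root
        InputAttribute
    End Enum

    ' Node ID -> unique name: a plain integer-to-string conversion.
    Function GetUniqueNameFromNodeId(nodeId As Integer) As String
        Return nodeId.ToString()
    End Function

    ' Unique name -> node ID: the same conversion in reverse.
    Function GetNodeIdFromUniqueName(uniqueName As String) As Integer
        Return Convert.ToInt32(uniqueName)
    End Function

    ' Node 0 is treated as the root; every other node is an input attribute.
    Function GetNodeType(currentNode As Integer) As DemoNodeType
        Select Case currentNode
            Case 0
                Return DemoNodeType.Root
            Case Else
                Return DemoNodeType.InputAttribute
        End Select
    End Function

    Sub Main()
        Dim name As String = GetUniqueNameFromNodeId(2)
        Console.WriteLine(GetNodeIdFromUniqueName(name) = 2) ' prints True
        Console.WriteLine(GetNodeType(0).ToString())         ' prints Root
        Console.WriteLine(GetNodeType(2).ToString())         ' prints InputAttribute
    End Sub

End Module
```

The explicit ToString calls matter in Visual Basic, because passing an enumeration value straight to Console.WriteLine can bind to the numeric overload and print the underlying integer instead of the member name.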

…………Decisions already have to be made based on the CurrentNode value, yet we have not yet assigned it in code. As mentioned in the last couple of tutorials, one of the trickiest aspects of debugging AlgorithmBase and AlgorithmMetadataBase is that calls to methods appear to come out of thin air, because they originate within msmdsrv.exe. It is a different expression of the same problem embodied in the hidden calls to undocumented methods, like GetAttributeValues and GetAttributeScores. In AlgorithmNavigationBase, this is manifested in apparently uncontrollable calls to the 18 mandatory methods, directed by msmdsrv.exe, which seem to leave developers without any well-defined place to specify the very node structure these routines operate on. That is not to say that these method calls are random; msmdsrv seems to follow a fairly consistent pattern, in which the calls listed above are followed by an invocation of GetParentCount, then GetNodeAttributes. After this, GetNodeUniqueName is usually followed by another call to GetUniqueNameFromNodeId, then both are repeated again, followed by yet another call to GetNodeType. The NODE_CAPTION is typically set again in GetStringNodeProperty for some reason, followed by further calls to GetNodeUniqueName and GetUniqueNameFromNodeId. SQL Server then invokes GetChildrenCount and GetParentCount, followed by three calls to GetStringNodeProperty in which the NODE_DESCRIPTION, NODE_RULE and MARGINAL_RULE are set; NODE_PROBABILITY and MARGINAL_PROBABILITY are then set in two separate calls to GetDoubleNodeProperty; the structure of the NODE_DISTRIBUTION table is then set in a single call to GetNodeDistribution, followed by alternating calls to GetDoubleNodeProperty and GetStringNodeProperty to set the NODE_SUPPORT, MSOLAP_MODEL_COLUMN, MSOLAP_NODE_SCORE and MSOLAP_NODE_SHORT_CAPTION. It was trivial to set the values of these in Post 14.2, when we were only dealing with a single node.
If we’re dealing with multiple nodes, we’d need to insert Select Cases on the CurrentNode value to assign the correct values of these columns to the appropriate nodes, but it wouldn’t normally be suitable to change the value of CurrentNode itself here. After this, SSDM usually calls LocateNode twice, which merely invokes ValidateNodeID, i.e. the routine where you’d embed any custom code to authenticate your node structure; in my algorithm, I simply added code to throw an error if the CurrentNode value exceeded the number of attributes. Somewhere in this vicinity, SSDM will call GetChildrenCount and sometimes MoveToChild, as well as GetParentNodeID and MoveToParent in some cases. Correctly specifying the cases when those particular routines are hit is the key to constructing your node network; there is no call to a method that simply declares the size and shape of the whole network explicitly. After this, SSDM will usually call GetCurrentNodeID and repeat this whole sequence over again until it reaches the final node, which it may never do if you don’t specify the node structure correctly.
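The kind of bounds check described for ValidateNodeID might be sketched as follows. This is a standalone illustration, not the SDK override: the routine name, its parameter and the AttributeCount constant are assumptions made for the example, and the lower-bound test is an addition that the post does not mention.

```vbnet
Imports System

Module ValidateNodeDemo

    ' Assumed for illustration: two input attributes, so valid node IDs
    ' are 0 (the root) through 2.
    Private Const AttributeCount As Integer = 2

    ' Throws when the node index falls outside the root plus one node per attribute.
    Sub ValidateNodeId(nodeId As Integer)
        If nodeId < 0 OrElse nodeId > AttributeCount Then
            Throw New ArgumentOutOfRangeException("nodeId", "No such node in this model.")
        End If
    End Sub

    Sub Main()
        ValidateNodeId(2) ' in range, so no exception is thrown
        Try
            ValidateNodeId(3)
        Catch ex As ArgumentOutOfRangeException
            Console.WriteLine("Rejected node 3")
        End Try
    End Sub

End Module
```

Failing fast here is cheaper than letting a bad index reach the routines that populate the node’s columns, where the resulting error is harder to trace.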

…………In a typical program, you’d expect to set a fixed number of nodes, perhaps in a class-scoped multi-dimensional array or collection that also determined the structure of the relationships between them. In SSDM plug-ins, however, the structure is specified at almost primordial levels, through calls to methods that determine the counts of each node’s parents and children. The numbers returned by GetParentCount and GetChildrenCount in turn determine how many times SSDM will iterate through MoveToParent and MoveToChild respectively. This group of methods also includes GetChildNodeId and GetParentNodeID, in which you merely return the parameter value supplied by SSDM, as far as I can tell. In my implementation, I merely wanted to return a root node with global statistics, followed by two child nodes representing two columns of input data I supplied to my mining model. Since the root node has no parents, I returned zero for GetParentCount when the CurrentNode was equal to zero, i.e. the unique index for the single root node; since the two input attributes have just one parent, I returned a value of one in all other cases. In GetChildrenCount, however, I returned a value equal to the number of input attributes when the CurrentNode matched the root node. Since the root node is the only parent, I returned zero when the CurrentNode is equal to any other value, since these are input attributes, which have no children of their own in this case. The CurrentNode value is only changed in one place in this trifling plug-in, in the MoveToChild routine, where it is increased by one in each iteration. The number of iterations will be equal to the value assigned to the single root node in GetChildrenCount. In my plug-in, MoveToParent is never invoked because the only parent specified in GetParentCount has already been passed once, but it may be triggered in structures that require more complex implementations of GetParentCount and GetChildrenCount.
This means of specifying the node structure is so basic that simple errors in your logic can cause this sequence of method calls to repeat itself indefinitely, until you receive a warning from SSMS that the XMLA query timed out. If you plan to develop plug-ins, expect to see a lot of these. Also expect to set aside more time than usual for the code that specifies your node structure, since it is done in an unconventional way that is not easy to control. I would prefer to deal with explicit definitions of those structures in old-fashioned class-scoped arrays or collections, which might be easier to manage when dealing with particularly complex node setups; yet perhaps there are sound reasons for the way Microsoft implemented this, maybe as a performance enhancement of some kind. Just keep in mind that most of the time you spend in AlgorithmNavigationBase will be devoted to specifying your node setup. Most of its methods are trivial, sometimes to the point of merely returning the values SSDM supplies in function parameters. Likewise, even the most complex node setups may not require many lines of code, but that doesn’t mean they won’t be a challenge to arrange properly. It is a good idea to have a clear idea of the structure you want beforehand, then to keep a close eye on the conditional logic in GetChildrenCount and GetParentCount, as well as paying close attention to the way in which you increase the value of CurrentNode or any other index placeholder variable you might use. Expect to iterate through the sequence of method calls outlined above many times during debugging before you get it right; it is not a bad idea to set breakpoints in many of them keyed to the Hit Count or Condition (which can be set in Visual Studio by right-clicking on the breakpoints).
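The root-plus-two-children structure described above can be simulated outside Analysis Services to check the counting logic before deploying anything. The sketch below is a hypothetical model, not the SDK class: DemoNavigator and the Walk driver are invented for illustration, and Walk only imitates the one behaviour the post describes, namely calling MoveToChild as many times as GetChildrenCount reports for the root.

```vbnet
Imports System
Imports System.Collections.Generic

Module NodeStructureDemo

    Private Const RootNode As Integer = 0
    Private Const InputAttributeCount As Integer = 2

    ' Hypothetical stand-in for the navigation class; only the members
    ' that shape the node structure are modelled.
    Class DemoNavigator
        Public Property CurrentNode As Integer = RootNode

        ' The root has no parent; each input attribute has exactly one.
        Public Function GetParentCount() As Integer
            Return If(CurrentNode = RootNode, 0, 1)
        End Function

        ' Only the root has children, one per input attribute.
        Public Function GetChildrenCount() As Integer
            Return If(CurrentNode = RootNode, InputAttributeCount, 0)
        End Function

        ' The single place where CurrentNode changes.
        Public Sub MoveToChild()
            CurrentNode += 1
        End Sub
    End Class

    ' Imitates the caller: visit the root, then step once per reported child.
    Function Walk() As List(Of Integer)
        Dim nav As New DemoNavigator()
        Dim visited As New List(Of Integer) From {nav.CurrentNode}
        Dim children As Integer = nav.GetChildrenCount()
        For i As Integer = 1 To children
            nav.MoveToChild()
            visited.Add(nav.CurrentNode)
        Next
        Return visited
    End Function

    Sub Main()
        Console.WriteLine(String.Join(", ", Walk())) ' prints 0, 1, 2
    End Sub

End Module
```

A harness like this makes it cheap to see what a change to the conditional logic does to the set of nodes visited, which is far quicker than waiting for an XMLA timeout in SSMS.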

…………As Crivat points out in the tutorial he wrote for the SDK, there may be cases in which a single node may have multiple parents, such as tree structures that represent graphs, but these instances are too extraordinary to warrant a full discussion. I should at least mention MoveToNextTree, a mandatory method Microsoft included to enable unusual structures with more than one root node. As the help file included with the SDK states, “Positions the navigator to the next content tree, if your algorithm’s content is laid out as multiple trees…Usually, all the content can be represented as a single tree and this function should return false. It is useful to implement this function when your content graph is disjoint, to ensure that all nodes can be traversed in a content query.” As I’ve said many times in this series and in others like An Informal Compendium of SSAS Errors, troubleshooting Analysis Services is sometimes like exploring deep space with the crew of the Enterprise, since you may be boldly going where no man has gone before. The MoveToNextTree method is another one of those features of SSAS for which there is apparently no documentation anywhere on the planet aside from the single line of code in sample files Crivat includes with his tutorials, and a single brief C++ example at the MSDN webpage A Tutorial for Constructing a Plug-in Algorithm. The latter doesn’t do much, but says “This function sets _iIDNode to be the root of the next tree in the sequence. Note that if we are in browser mode, there will never be a next tree.” The only useful documentation I could find is the sentence quoted above from the .chm file. Undaunted, I decided to experiment by simply returning a value of True in all cases, which will create an infinite loop that eventually triggers the XMLA timeout warning. The code in Figure 1 worked because it included a limit on the number of times the routine returns True. 
Each time MoveToNextTree returns that value, it not only iterates through the sequence of method calls we discussed earlier, but does so for each node in that structure. Intrepid programmers with a need for disjoint graphs (i.e. sets with no common members) could add conditional logic to the other methods we’ve already discussed to return different values, depending on which of the separate tree structures is being iterated through. In my case, the code below merely added three nodes under the root, on the same level as the other two inputs, although I suppose I could have included conditional logic in other routines to create secondary roots or the like.

Figure 1: Some Sample MoveToNextTree Code

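Static TreeCount As Int16 ‘retains its value between calls to the routine

If TreeCount < 3 Then

TreeCount += 1S ‘count this pass so the routine eventually stops returning True

Return True

Else

Return False

End If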

…………The remaining functionality we have yet to discuss in AlgorithmNavigationBase all pertains to ways of supplying values for columns in SSDM’s common metadata format for a particular node. For the sake of brevity, I left a discussion of GetNodeAttributes out of Post 14.2, even though it’s relatively inconsequential. It merely specifies the values assigned to the ATTRIBUTE_NAME column by supplying the names already assigned to your attributes, which in my case correspond to mining model columns labeled “InputStat” and “SecondInputStat.” All you need to do is supply the index of the attribute in your AttributeSet to the return variable, which is an array of unsigned integers. To create a comma-separated list of attributes associated with a particular node, return more than one value in the array. This week’s tutorial also features a more complex implementation of the NODE_DISTRIBUTION table than the one returned for a single node a couple of posts ago. The Select Case returns the marginal stats for the whole model when the CurrentNode is equal to the index of the root, but numbers specific to the InputStat or SecondInputStat column for the two child nodes. First I add the marginal stats for the appropriate column to a collection of AttributeStatistics objects, which in turn specifies the values for the first few rows of its NODE_DISTRIBUTION table. The real difference between this and the code we used a few weeks ago is that we’re also including three custom NODE_DISTRIBUTION rows, by adding three extra AttributeStatistics objects whose StateStatistics objects have custom values. First I instantiate the new AttributeStatistics objects, then the three StateStatistics objects which are added to them at the end of the Case statement. The VALUETYPE for each custom NODE_DISTRIBUTION row is set using the MiningValueType enumeration, as depicted in Figure 2.
Immediately after that, I assign the ATTRIBUTE_VALUE to either the skewness, kurtosis or excess kurtosis value we associated with that attribute in previous tutorials. I’ve left the NODE_SUPPORT, NODE_PROBABILITY and VARIANCE blank in these cases, but set the ATTRIBUTE_NAME (which should not be confused with the column of the same name which is one level higher in the hierarchy of SSDM’s common metadata format) using the NodeID property. Ordinarily it might also be a good idea to add logic in this routine to do conditional processing based on the NODE_TYPE, but I didn’t want to complicate this tutorial further by nesting Select Cases and other such statements inside each other.
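As a rough illustration of the GetNodeAttributes behaviour described above, the following standalone sketch returns an array of unsigned attribute indexes for a given node. Several things here are assumptions rather than the post’s actual code: the function takes the node as a parameter instead of reading a class-scoped CurrentNode, the root is arbitrarily given both attributes to show the multi-value case, and DescribeNode exists only to display the result.

```vbnet
Imports System
Imports System.Collections.Generic
Imports System.Linq

Module NodeAttributesDemo

    ' Attribute names in AttributeSet order, as named in the post.
    Private ReadOnly AttributeNames As String() = {"InputStat", "SecondInputStat"}

    ' Returns the attribute indexes associated with a node.
    Function GetNodeAttributes(currentNode As Integer) As UInteger()
        Select Case currentNode
            Case 0
                ' Assumption: the root lists every attribute, which yields
                ' a comma-separated ATTRIBUTE_NAME value.
                Return New UInteger() {0UI, 1UI}
            Case Else
                ' Child node N maps to attribute N - 1.
                Return New UInteger() {CUInt(currentNode - 1)}
        End Select
    End Function

    ' Hypothetical helper: resolves the indexes to names for display.
    Function DescribeNode(currentNode As Integer) As String
        Dim names As IEnumerable(Of String) =
            GetNodeAttributes(currentNode).Select(Function(i) AttributeNames(CInt(i)))
        Return String.Join(", ", names)
    End Function

    Sub Main()
        Console.WriteLine(DescribeNode(0)) ' prints InputStat, SecondInputStat
        Console.WriteLine(DescribeNode(2)) ' prints SecondInputStat
    End Sub

End Module
```

The same CurrentNode minus one offset appears in the NODE_DISTRIBUTION code in Figure 2, since node 0 is reserved for the root.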

Figure 2: Adding Custom Rows to the NODE_DISTRIBUTION Table

Case Else

Dim TempStats As AttributeStatistics() = New AttributeStatistics(3) {}

TempStats(0) = CurrentAlgorithm.MarginalStats.GetAttributeStats(CurrentNode - 1)

TempStats(1) = New AttributeStatistics

TempStats(2) = New AttributeStatistics

TempStats(3) = New AttributeStatistics

Dim SkewnessState, KurtosisState, ExcessKurtosisState As New StateStatistics()

SkewnessState.ValueType = MiningValueType.Other

KurtosisState.ValueType = MiningValueType.Coefficient

ExcessKurtosisState.ValueType = MiningValueType.Intercept

SkewnessState.Value.SetDouble(CurrentAlgorithm.SkewnessKurtosisClassArray(CurrentNode - 1).Skewness)

KurtosisState.Value.SetDouble(CurrentAlgorithm.SkewnessKurtosisClassArray(CurrentNode - 1).Kurtosis)

ExcessKurtosisState.Value.SetDouble(CurrentAlgorithm.SkewnessKurtosisClassArray(CurrentNode - 1).ExcessKurtosis)

TempStats(1).StateStatistics.Add(SkewnessState)

TempStats(2).StateStatistics.Add(KurtosisState)

TempStats(3).StateStatistics.Add(ExcessKurtosisState)

‘we have to set the ATTRIBUTE_NAME string that appears next to these stats in the parent AttributeStats object, for whatever reason

TempStats(1).Attribute = AttributeSet.Unspecified

TempStats(1).NodeId = “Skewness”

TempStats(2).Attribute = AttributeSet.Unspecified

TempStats(2).NodeId = “Kurtosis”

TempStats(3).Attribute = AttributeSet.Unspecified

TempStats(3).NodeId = “Excess Kurtosis”

Return TempStats

…………The updated code I’ve made available here produced the three nodes depicted in Figures 3 through 5. The first NODE_DISTRIBUTION table includes the marginal stats for the whole model, plus some values for NODE_CAPTION, NODE_DESCRIPTION and other such columns that we assigned in GetDoubleNodeProperty and GetStringNodeProperty a couple of tutorials ago. Note how the NODE_DISTRIBUTION tables for the second and third figures include custom rows featuring the values for the skewness, kurtosis and excess kurtosis associated with the column mentioned in the ATTRIBUTE_NAME column (the one at the top of each figure, not in the NODE_DISTRIBUTION tables). I have yet to solve the mystery of why the VALUETYPE for the skewness rows is set to Periodicity, when the value supplied in code is MiningValueType.Other, but that is insignificant at this point. The mystery of how the Generic Content Viewer columns are populated in plug-in algorithms is essentially solved; would-be plug-in writers need only decide what they want to appear in them, rather than worrying about how to get the values in there. Only a few enigmas remain in the attic at the top of this Rickety Stairway, such as how to use the Predict method, advanced functionality like custom mining functions and Predictive Model Markup Language (PMML). After those four plug-in tutorials are finished, we’ll unravel one final mystery to end the series, how to write custom data mining viewers. Discussion of GetNodeIDsForCase, a specialized AlgorithmNavigationBase method, will be deferred until then because it implements drillthrough to mining model cases, which can’t be done in the Generic Content Viewer.
Since the more useful and attractive mining viewers that ship with the product depend on the same values as the Generic Content Viewer, it would be fairly easy to upgrade this sample AlgorithmNavigationBase code to create structures that are compatible with them, such as hierarchies that can be displayed by the Decision Trees Viewer. It wouldn’t be child’s play and the code would be lengthy enough to unduly clutter this series, but once you grasp the basic principles of how to navigate through the appropriate structure, upgrading this skeleton code into custom algorithms that can be displayed in the out-of-the-box mining viewers becomes feasible. The last tutorial in this series will address scenarios where those viewers don’t meet the needs of end users, which calls for custom means of displaying data in the Visual Studio GUI or SSMS.

A Rickety Stairway to SQL Server Data Mining, Part 14.3: Debugging and Deployment

By Steve Bolton

…………Throughout this series of amateur self-tutorials in SQL Server Data Mining (SSDM), I’ve often said that working with Analysis Services is a bit like blasting off into space with the Starship Enterprise, because you may be boldly going where no man has gone before. My An Informal Compendium of SSAS Errors series remains one of the few centralized sources of information on certain arcane SQL Server Analysis Services (SSAS) errors, some of which turn up no hits on Google and appear to have no extant documentation anywhere on the planet. SSDM is one of the most powerful yet under-utilized components of Analysis Services, which represents Microsoft’s contribution to the cutting edge field of data mining, so we’re venturing even further into uncharted territory in this series. Within SSDM, the most powerful but obscure feature is the extensibility mechanism for writing custom algorithms, so that takes us even further out on a limb. Debugging is likewise one of the most trying tasks in the computing field, since it often involves delving into the unknown to find the causes of errors that may be completely unique to a particular user, system or piece of software. It is thus not surprising that debugging SSDM plug-in algorithms is perhaps the most difficult step to climb in this Rickety Stairway.

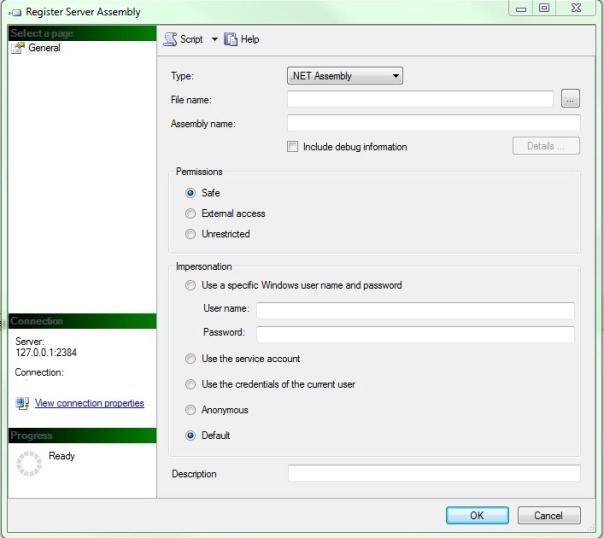

…………To complicate matters even further, deployment bugs are often the most difficult to ferret out in any type of software, due to the endless variety of system configurations it may be installed on, not to mention the fact that it is difficult to debug a program that isn’t even running yet. This issue is more prominent in the deployment of SSDM algorithms, for the simple reason that the Visual Studio debugger will only be triggered on breakpoints in your custom code or the DMPluginWrapper.dll it references, not in the SSAS process (msmdsrv.exe) that calls it. In the last two tutorials I gave examples of how to write a custom algorithm in Visual Basic.Net classes and compile the DMPluginWrapper file, in which you can set breakpoints at any line of code you prefer. After that it is a matter of starting Analysis Services, then using the Attach to Process command in Visual Studio to link the debugger to the msmdsrv process and instruct it to break on both native and .Net code (in the case of my project, the .Net version was 4.0). Whenever breakpoints in the methods of the three main plug-in classes that we discussed in last week’s tutorial are hit, or those methods call breakpoints in the original DMPluginWrapper class they’re derived from, Visual Studio should break. One of the leading challenges of debugging these projects is that sometimes they don’t break when you’d expect them to, primarily because msmdsrv calls these methods in an order that is not readily apparent to programmers. For example, in an ordinary desktop .Net program, the call stack would begin at the breakpoint, then move to whatever routine called the routine containing the breakpoint, and so on until you eventually hit a Sub Main or similar entry point at the beginning of the application.
With SSDM plug-ins, the call stack often starts with a single routine in your project, which leads back to a routine in the DMPluginWrapper file, which in turn is called by internal msmdsrv.exe routines that you can’t debug or control the execution of. As a result, tracking down the causes of errors is much trickier than in ordinary applications, because you can’t always tell for certain which line of code caused a particular error, or even determine why a routine in your code was called rather than another. The call stack is essentially decoupled, which makes it appear at first as if some errors are being generated from thin air out of left field. In these cases the errors usually turn out to be in your plug-in code, but are only detected in the space in the call stack taken up by internal msmdsrv methods. As mentioned last week, my aim for now is merely to provide the leanest possible version of a VB plug-in, merely to illustrate how SSDM can be directed to return any results at all. Even a bare bones algorithm missing prediction, navigation, feature selection, parameters and other functionality is not feasible without first surmounting the challenge of deploying anything at all. The daunting dilemmas of debugging are readily apparent from the beginning of the deployment phase, which makes it necessary to discuss the two topics together, before fleshing out more advanced features of plug-ins.

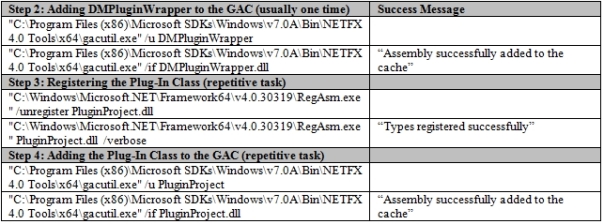

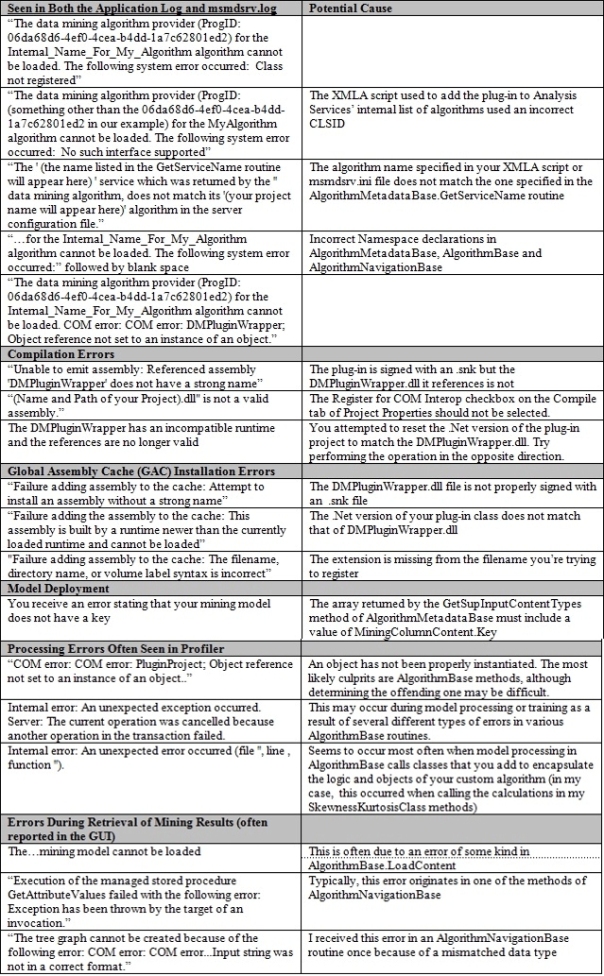

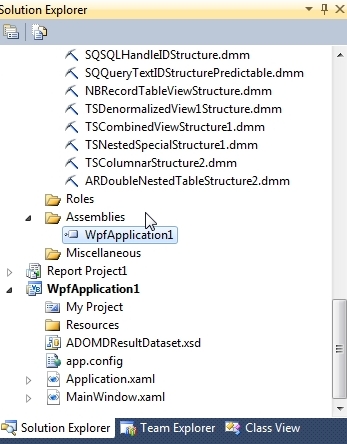

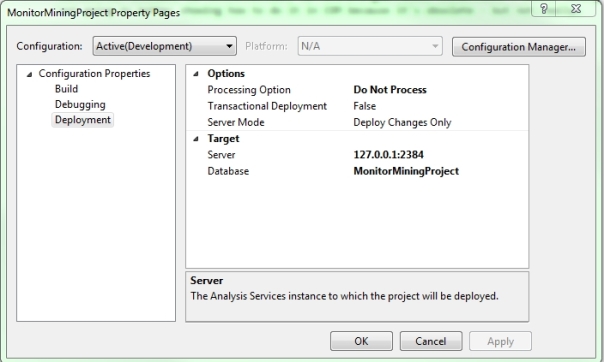

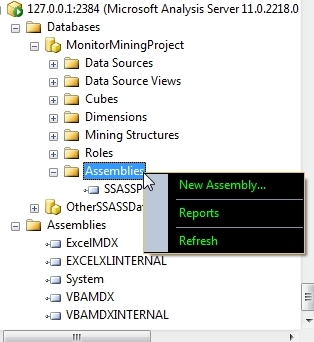

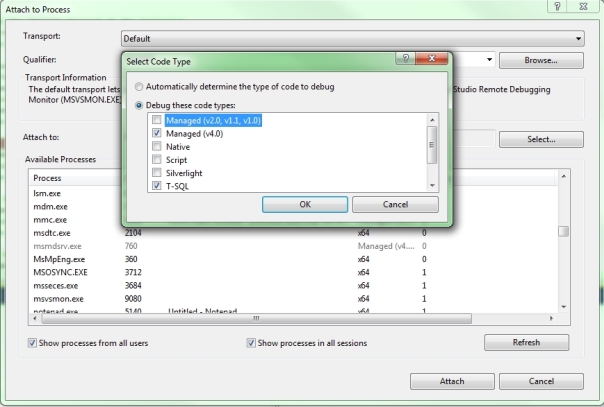

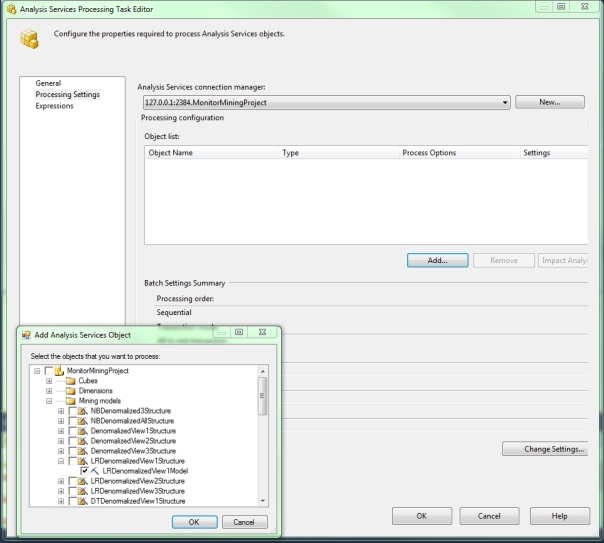

…………The process of deployment consists primarily of eight steps, including 1) generating .snk Strong Name Key files for your DMPluginWrapper and plug-in class in order to debug them, which only needs to be done once before adding them to the Global Assembly Cache (GAC); 2) adding the DMPluginWrapper to the GAC, as well as removing it first if it already exists; 3) compiling the latest version of the project; 4) registering your plug-in class as a COM class and if necessary, unregistering the old version first; 5) adding the plug-in class to the GAC, as well as removing any old versions first; 6) informing SSAS of the existence of the new algorithm; 7) restarting the SSAS service; then 8) using Attach to Process as outlined above to begin debugging. After that, you merely need to trigger the appropriate routines in your code, such as processing a mining model to trigger the InsertCases and ProcessCase routines in AlgorithmBase or refreshing the GUI view of mining model results in SQL Server Management Studio (SSMS) or Visual Studio to trigger various routines in AlgorithmNavigationBase, all of which we discussed in depth in last week’s article. All but two of these steps must be performed repeatedly during plug-in development, so I highly recommend placing most of the command line prompts I’ll mention hereafter in a .bat file that can perform them all with a single keystroke. One of those exceptions is Step #6, which only has to be performed once for any plug-in you write.
The easy way is to run the XML for Analysis (XMLA) script below, substituting the GUID of your algorithm in the CLSID value and the name of your algorithm for mine, “Internal_Name_For_My_Algorithm.” If the name does not match the one specified in the AlgorithmMetadataBase.GetServiceName routine we discussed last week, you’ll see an error like this in your msmdsrv.log and Application event log: “The ‘ (the name listed in the GetServiceName routine will appear here) ‘ service which was returned by the ” data mining algorithm, does not match its ‘(your project name will appear here)’ algorithm in the server configuration file.”

Figure 1: Sample XMLA Code to Register a Plug-In

<Alter

AllowCreate="true"

ObjectExpansion="ObjectProperties"

xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<Object/>

<ObjectDefinition>

<Server

xmlns:xsd="http://www.w3.org/2001/XMLSchema"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">

<Name>.</Name>

<ServerProperties>

<ServerProperty>

<Name>DataMining\Algorithms\Internal_Name_For_My_Algorithm\Enabled</Name>

<Value>1</Value>

</ServerProperty>

<ServerProperty>

<Name>DataMining\Algorithms\Internal_Name_For_My_Algorithm\CLSID</Name>

<Value>06da68d6-4ef0-4cea-b4dd-1a7c62801ed2</Value>

</ServerProperty>

</ServerProperties>

</Server>

</ObjectDefinition>

</Alter>
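…………Since the script in Figure 1 differs between plug-ins only in the service name and the CLSID, it can be generated rather than hand-edited. The following is a minimal sketch in Python, not part of the original project; the function name build_registration_xmla is hypothetical, and the template simply mirrors Figure 1. It rejects malformed GUIDs and confirms that the result is well-formed XML before returning it.

```python
import uuid
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

# Template mirroring Figure 1; {service} and {clsid} are the only variable parts.
XMLA_TEMPLATE = """<Alter AllowCreate="true" ObjectExpansion="ObjectProperties"
       xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">
  <Object/>
  <ObjectDefinition>
    <Server xmlns:xsd="http://www.w3.org/2001/XMLSchema"
            xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
      <Name>.</Name>
      <ServerProperties>
        <ServerProperty>
          <Name>DataMining\\Algorithms\\{service}\\Enabled</Name>
          <Value>1</Value>
        </ServerProperty>
        <ServerProperty>
          <Name>DataMining\\Algorithms\\{service}\\CLSID</Name>
          <Value>{clsid}</Value>
        </ServerProperty>
      </ServerProperties>
    </Server>
  </ObjectDefinition>
</Alter>
"""


def build_registration_xmla(service_name, clsid):
    """Return the Figure 1 script for one plug-in (hypothetical helper).

    service_name must match what AlgorithmMetadataBase.GetServiceName returns.
    clsid may be written with or without braces, in either case.
    """
    # uuid.UUID raises ValueError on a malformed GUID and normalizes the
    # accepted forms to lowercase, hyphenated text without braces.
    normalized = str(uuid.UUID(clsid))
    xmla = XMLA_TEMPLATE.format(service=escape(service_name), clsid=normalized)
    ET.fromstring(xmla)  # raises ParseError if the result is not well-formed
    return xmla


if __name__ == "__main__":
    print(build_registration_xmla(
        "Internal_Name_For_My_Algorithm",
        "{06DA68D6-4EF0-4CEA-B4DD-1A7C62801ED2}"))
```

The output can be pasted into an XMLA query window in SSMS exactly as the hand-written version would be.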

Figure 2: Newly Deployed Plug-In Depicted in the SSAS .ini File

<Internal_Name_For_My_Algorithm>

<Enabled>1</Enabled>

<CLSID>06da68d6-4ef0-4cea-b4dd-1a7c62801ed2</CLSID>

</Internal_Name_For_My_Algorithm>

…………If the XMLA script runs successfully, then after restarting the service you’ll see the change reflected in the msmdsrv.ini file. Under the ConfigurationSettings\Data Mining\Algorithms node, you’ll find the nine out-of-the-box algorithms listed, all prefixed with the name “Microsoft” and sans any GUID tag. For each custom plug-in, you’ll see a listing like the one in Figure 2, in which the opening and closing tags are equivalent to the service name of the algorithm I developed in last week’s tutorial. Note that some versions of the XMLA script listed in other tutorials will set the Enabled value to True rather than 1, which doesn’t seem to cause any unwanted side effects that I’m aware of yet, but I changed from the Boolean to the integer value to be consistent with the format used in the .ini file for the out-of-the-box algorithms. There really aren’t many ways to mess up the XMLA script, which only has to be run correctly one time for each plug-in. While troubleshooting my deployment problems I tried substituting incorrect names and GUID values that did not yet exist in the registry, which led to errors like these in the Application log and msmdsrv file: “The data mining algorithm provider (ProgID: 06da68d6-4ef0-4cea-b4dd-1a7c62801ed2) for the Internal_Name_For_My_Algorithm algorithm cannot be loaded. The following system error occurred: Class not registered” and “The data mining algorithm provider (ProgID: (a GUID other than the 06da68d6-4ef0-4cea-b4dd-1a7c62801ed2 sample value we’re using here)) for the MyAlgorithm algorithm cannot be loaded. The following system error occurred: No such interface supported.” When editing the .ini manually instead of using the XMLA script, the range of errors is limited only by one’s imagination; it’s a bit like the old proverb, “God put obvious limits on our intelligence, but none whatsoever on our stupidity.” Nonetheless, I like to at least manually inspect the .ini after running an XMLA script like this or receiving other deployment errors, just to make sure; that is how I caught the subtle difference between the data types for the Enabled tag, for example. When manually editing the .ini file, don’t edit the <Services> tag under <DataMining> to add your plug-in class, because it’s strictly for built-in algorithms.[i] I recommend keeping shortcuts to the .ini and SSAS log files in a handy place, because you’re going to need to check them repeatedly when testing plug-in algorithms.
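…………The manual inspection of the .ini described above can also be automated. The sketch below is an illustration under assumptions rather than a tested tool: it assumes msmdsrv.ini parses as XML with a ConfigurationSettings root and a DataMining/Algorithms node beneath it, and the function name check_algorithm_entry is hypothetical. It reports a missing entry, an unexpected Enabled value, or a CLSID that differs from the GUID on your AlgorithmMetadataBase class.

```python
import uuid
import xml.etree.ElementTree as ET


def check_algorithm_entry(ini_text, service_name, expected_clsid):
    """Return a list of problems found for one plug-in entry (empty if none).

    Assumes the .ini layout ConfigurationSettings/DataMining/Algorithms/<name>,
    with Enabled and CLSID child tags as in Figure 2.
    """
    root = ET.fromstring(ini_text)
    algorithms = root.find("DataMining/Algorithms")
    if algorithms is None:
        return ["no DataMining/Algorithms node found"]

    # Compare tags directly so unusual service names cannot break a path query.
    entry = next((c for c in algorithms if c.tag == service_name), None)
    if entry is None:
        return ["no <%s> entry under Algorithms" % service_name]

    problems = []
    enabled = (entry.findtext("Enabled") or "").strip().lower()
    if enabled not in ("1", "true"):  # both forms are mentioned in the text
        problems.append("Enabled is %r, expected 1" % enabled)

    clsid_text = (entry.findtext("CLSID") or "").strip()
    try:
        # uuid.UUID ignores braces and letter case, so only real
        # differences between the two GUIDs are reported.
        if uuid.UUID(clsid_text) != uuid.UUID(expected_clsid):
            problems.append("CLSID %s does not match %s"
                            % (clsid_text, expected_clsid))
    except ValueError:
        problems.append("CLSID %r is not a valid GUID" % clsid_text)
    return problems
```

In practice you would read the file's text from wherever your instance keeps msmdsrv.ini and pass it in along with the service name and GUID; an empty list means the entry looks consistent.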

…………The script only needs to be run one time for each plug-in, but you obviously can’t run it successfully without first compiling and registering your class at least once. The first step in the process also only needs to be performed once, although there is more room for error in compiling your projects with Strong Name Key files. The other six steps in the deployment process will be repeated ad infinitum, ad nauseam while you debug your code, so we’ll discuss them separately, even though there is some overlap between these topics. The fun begins with generation of the CLSID you see in the XMLA script above, which uniquely identifies your algorithm in the registry and can be generated through a wide variety of tools familiar to Visual Studio and SQL Server programmers, like guidgen.exe, the Create GUID menu function and the NewID() function. The best option when working with VB projects, however, is to check the Sign the assembly checkbox under the Signing tab of Project Properties, which will generate a GUID for you; copy it from the Assembly Information dialog under Project Properties and put it in the GUID tag that adorns your AlgorithmMetadataBase class declaration before compiling the project. This should be identical to the CLSID in the XMLA script. In the Assembly Information box, also select the Make assembly COM Visible checkbox. Do not, however, select the Register for COM Interop checkbox on the Compile tab, otherwise you may receive the following error during compilation: “‘(Name and Path of your Project).dll’ is not a valid assembly.” Back on the Signing tab, you must also add a Strong Name Key file generated by using the Visual Studio sn.exe tool. The DMPluginWrapper.dll file you referenced must also be signed with an .snk and recompiled first, otherwise Visual Studio won’t break on exceptions or hit your breakpoints during debugging, nor will it be installed correctly in the GAC.
At one point I received the error, “Unable to emit assembly: Referenced assembly ‘DMPluginWrapper’ does not have a strong name” because I signed my plug-in class but not the DMPluginWrapper.dll it referenced. So I took the easy way out and simply removed signing from my plug-in, which won’t work because you’ll encounter the following error when trying to add DMPluginWrapper to the GAC: “Failure adding assembly to the cache: Attempt to install an assembly without a strong name.” I adapted the examples provided by Inaki Ayucar at the CodeProject.com webpage How to Sign C++/CLI Assemblies with a Strong Name and Prasanjit Mandal at the MSDN thread Failure Adding Assembly to the Cache: Attempt to Install an Assembly without a Strong Name and ended up with command line text like this: sn.exe -k DMPluginWrapper.snk. I didn’t need to specify the full path to the file, but as the saying goes, Your Mileage May Vary (YMMV). It is also a good idea to verify the signing by using the -v switch on sn.exe. It is critical not to forget to add the following tag to your DMPluginWrapper’s AssemblyInfo.cpp file, substituting the name of the .snk you just generated: [assembly:AssemblyKeyFile("DMPluginWrapper.snk")]. Then rebuild the DMPluginWrapper project and reset the reference to the .dll in your plug-in class project. Add an .snk for that project as well on the Signing tab, then rebuild it as well. Compilation should occur without errors at this point and adding both classes to the GAC should now be feasible, provided you do not stumble over one of the following hurdles when adding the assemblies.
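…………Once both assemblies are signed, the repeated steps from the eight-step list (2, 4, 5 and 7) can be collected in one place, in the spirit of the .bat file recommended earlier. The sketch below only builds the command lines and prints them as a dry run; it does not execute anything. It is a set of assumptions rather than the exact commands used in this series: the function name build_deploy_commands is hypothetical, the switches are the standard ones for gacutil.exe and regasm.exe, MSSQLServerOLAPService is assumed to be the service name of a default SSAS instance, and Step 3 (compiling) is assumed to be done in Visual Studio beforehand.

```python
from pathlib import PureWindowsPath


def build_deploy_commands(wrapper_dll, plugin_dll,
                          service="MSSQLServerOLAPService"):
    """Return the repeated deployment steps as a list of command lines.

    Each command is a list of arguments. Nothing is executed here.
    """
    # gacutil /u takes the assembly name, i.e. the file name without ".dll".
    wrapper_name = PureWindowsPath(wrapper_dll).stem
    plugin_name = PureWindowsPath(plugin_dll).stem
    return [
        # Step 2: replace DMPluginWrapper in the GAC.
        ["gacutil.exe", "/u", wrapper_name],
        ["gacutil.exe", "/i", wrapper_dll],
        # Step 4: unregister, then register the plug-in as a COM class.
        ["regasm.exe", plugin_dll, "/unregister"],
        ["regasm.exe", plugin_dll],
        # Step 5: replace the plug-in in the GAC.
        ["gacutil.exe", "/u", plugin_name],
        ["gacutil.exe", "/i", plugin_dll],
        # Step 7: restart Analysis Services so it reloads the algorithm.
        ["net", "stop", service],
        ["net", "start", service],
    ]


if __name__ == "__main__":
    # The paths below are placeholders, not the ones used in this series.
    for command in build_deploy_commands(r"C:\build\DMPluginWrapper.dll",
                                         r"C:\build\MyPlugin.dll"):
        print(" ".join(command))
```

The printed lines can be pasted into a .bat file as-is, after adjusting the paths and, for a named instance, the service name.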